Copyright © 1997 by Rikk Carey and Gavin Bell

This chapter provides a detailed definition of the syntax and semantics of each node in the VRML specification. The nodes are listed in alphabetical order, and Table 3-1 lists the topics in this chapter.

In this chapter, the first item in each section is the public interface specification for the node. This interface defines the names and types of the fields and events for the node, as well as the default values for the fields of the node. Note that this syntax is not the actual file format syntax. However, the parts of the interface that are identical to the file syntax are in bold. For example, the following defines the Collision node's public interface and file format:

```vrml
Collision {
  eventIn      MFNode   addChildren
  eventIn      MFNode   removeChildren
  exposedField MFNode   children        []
  exposedField SFBool   collide         TRUE
  field        SFVec3f  bboxCenter      0 0 0     # (-∞,∞)
  field        SFVec3f  bboxSize        -1 -1 -1  # (0,∞) or -1,-1,-1
  field        SFNode   proxy           NULL
  eventOut     SFTime   collideTime
}
```

Note that the interface specification also includes the value ranges for the node's fields and exposedFields (where appropriate). Parentheses imply that the range bound is exclusive, while brackets imply that the range value is inclusive. For example, a range of (-∞,1] defines the lower bound as -∞ exclusively and the upper bound as 1 inclusively.
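To make the notation concrete, the following Python sketch checks a value against a range written in this style. The function `in_range` and its flags are hypothetical helpers for illustration only; VRML itself uses the notation only in the comments of the interface specifications.

```python
import math


def in_range(value: float, lo: float, hi: float,
             lo_inclusive: bool, hi_inclusive: bool) -> bool:
    """Test whether value lies inside a VRML-style range.

    A bracket ([ or ]) corresponds to an inclusive bound (<=) and a
    parenthesis (( or )) to an exclusive bound (<).  Infinite bounds are
    written with math.inf.
    """
    above_lo = value >= lo if lo_inclusive else value > lo
    below_hi = value <= hi if hi_inclusive else value < hi
    return above_lo and below_hi


# The (-inf,1] example from the text: 1 is inside, 1.5 is not.
print(in_range(1.0, -math.inf, 1.0, lo_inclusive=False, hi_inclusive=True))  # True
print(in_range(1.5, -math.inf, 1.0, lo_inclusive=False, hi_inclusive=True))  # False
```

The same helper covers a range such as (0,∞): `in_range(0.0, 0.0, math.inf, False, False)` is False because the lower bound is exclusive.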

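The fields and events contained within the public interface of node types are ordered as follows:

1. eventIns, in alphabetical order;
2. exposedFields, in alphabetical order;
3. fields, in alphabetical order;
4. eventOuts, in alphabetical order.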

```vrml
Anchor {
  eventIn      MFNode   addChildren
  eventIn      MFNode   removeChildren
  exposedField MFNode   children        []
  exposedField SFString description     ""
  exposedField MFString parameter       []
  exposedField MFString url             []
  field        SFVec3f  bboxCenter      0 0 0     # (-∞,∞)
  field        SFVec3f  bboxSize        -1 -1 -1  # (0,∞) or -1,-1,-1
}
```

The Anchor grouping node retrieves the content of a URL when the user activates (e.g., clicks) some geometry contained within the Anchor node's children. If the URL points to a valid VRML file, that world replaces the world of which the Anchor node is a part (except when the parameter field, described below, alters this behaviour). If non-VRML data is retrieved, the browser shall determine how to handle that data; typically, it will be passed to an appropriate non-VRML browser.

The name Anchor comes from the HTML Anchor tag (<A HREF=...>), which is used to create hyperlinked text in HTML. It was called WWWAnchor in VRML 1.0, but the WWW was dropped.

Exactly how a user activates geometry contained by the Anchor node depends on the pointing device and is determined by the VRML browser. Typically, clicking with the pointing device will result in the new scene replacing the current scene. An Anchor node with an empty url does nothing when its children are chosen. A description of how multiple Anchors and pointing-device sensors are resolved on activation is contained in "2.6.7 Sensor nodes."

A description of children, addChildren, and removeChildren fields and eventIns may be found in "2.6.5 Grouping and children nodes."

The description field in the Anchor node specifies a textual description of the Anchor node. This may be used by browser-specific user interfaces that wish to present users with more detailed information about the Anchor.

The candidate Anchor is the Anchor with geometry that is underneath the pointing device. The pointing device is usually a mouse (or a mouse substitute like a trackball or touchpad).

The parameter exposed field may be used to supply any additional information to be interpreted by the VRML or HTML browser. Each string shall consist of "keyword=value" pairs. For example, some browsers allow the specification of a 'target' for a link to display a link in another part of the HTML document. The parameter field is then:

```vrml
Anchor {
  parameter [ "target=name_of_frame" ]
  ...
}
```
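A browser that honours the parameter field has to split each string into its keyword and value. The sketch below assumes a split at the first `=` and nothing more; `parse_parameters` is a hypothetical helper, and what a keyword such as `target` actually does is left to each browser.

```python
from typing import Dict, List


def parse_parameters(parameter: List[str]) -> Dict[str, str]:
    """Split Anchor parameter strings of the form "keyword=value".

    Strings without an "=" are skipped here; how a real browser treats
    malformed entries, and what each keyword means, is browser-specific.
    """
    result: Dict[str, str] = {}
    for entry in parameter:
        keyword, sep, value = entry.partition("=")  # split at the first "="
        if sep:
            result[keyword.strip()] = value
    return result


print(parse_parameters(["target=name_of_frame"]))  # {'target': 'name_of_frame'}
```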

The parameter field was added to allow Anchors to bring up hyperlinks in other HTML frames on the same Web page. When VRML 2.0 was originally being designed, Netscape Navigator was the only HTML browser that supported multiple frames, so instead of adding a frame or target field just to support that feature, the more general parameter field was added to Anchor. That avoided adding any Netscape-specific features to VRML and allows for future additions.

An Anchor node may be used to bind the initial Viewpoint node in a world by specifying a URL ending with "#ViewpointName" where "ViewpointName" is the name of a viewpoint defined in the file. For example:

```vrml
Anchor {
  url "http://www.school.edu/vrml/someScene.wrl#OverView"
  children Shape { geometry Box {} }
}
```

specifies an anchor that loads the file "someScene.wrl" and binds the initial user view to the Viewpoint node named "OverView" when the Anchor node's geometry (Box) is activated. If the named Viewpoint node is not found in the file, the file is loaded using the default Viewpoint node binding stack rules (see "3.53 Viewpoint").

If the url field only contains a "#ViewpointName" (i.e. no file name), the Viewpoint node named "ViewpointName" in the current world shall be bound (set_bind TRUE). See "3.53 Viewpoint" for the Viewpoint transition rules that specify how browsers shall interpret the transition from the old Viewpoint node to the new one. For example:

```vrml
Anchor {
  url "#Doorway"
  children Shape { geometry Sphere {} }
}
```

binds the viewer to the viewpoint defined by the "Doorway" viewpoint in the current world when the sphere is activated. In this case, if the Viewpoint is not found, nothing is done on activation.
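The two url forms above differ only in whether anything precedes the `#`. A minimal Python sketch of that decision is shown below. It uses the standard library's `urllib.parse.urldefrag` to separate the fragment. `resolve_anchor_url` and `describe_activation` are hypothetical names. The sketch handles a single string even though url is an MFString; see "2.5 VRML and the World Wide Web" for how multiple entries are treated.

```python
from typing import Tuple
from urllib.parse import urldefrag


def resolve_anchor_url(url: str) -> Tuple[str, str]:
    """Split one Anchor url string into (world_to_load, viewpoint_name)."""
    world, fragment = urldefrag(url)
    return world, fragment


def describe_activation(url: str) -> str:
    world, viewpoint = resolve_anchor_url(url)
    if not world and not viewpoint:
        return "do nothing (empty url)"
    if not world:
        # "#Name" only: bind in the current world; if missing, do nothing.
        return f"bind Viewpoint {viewpoint!r} in the current world"
    if viewpoint:
        # Falls back to the default binding rules if the name is not found.
        return f"load {world} and bind Viewpoint {viewpoint!r}"
    return f"load {world} with the default Viewpoint binding"


print(describe_activation("#Doorway"))
# bind Viewpoint 'Doorway' in the current world
print(describe_activation("http://www.school.edu/vrml/someScene.wrl#OverView"))
# load http://www.school.edu/vrml/someScene.wrl and bind Viewpoint 'OverView'
```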

More details on the url field are contained in "2.5 VRML and the World Wide Web."

Since navigating around 3D worlds can be difficult, it is recommended that authors provide navigation assists whenever possible. The Anchor node serves as an excellent tool for creating simple guided tours or navigation aids in a 3D world. Place signposts or other recognizable objects (e.g., labeled buttons) throughout the world with Anchor nodes as parents, and define each Anchor to refer to a Viewpoint defined in the world (e.g., Anchor { url "#someViewpoint" ... }). Typically, there should be at least one visible signpost from every Viewpoint. This ensures that the user knows where to go after visiting each stop. When creating guided tours, authors should include backward and forward links at each signpost. Remember that VRML does not specify what happens during the transition to a Viewpoint and thus could perform a jump cut, an animated movement, or some other transitional effect. If an author wishes to control the transition precisely, then the only option is to use TouchSensors with Scripts programmed to bind and unbind Viewpoints, which are animated by PositionInterpolators and OrientationInterpolators. This is a much more complicated task than using the simple Anchor node.

The bboxCenter and bboxSize fields specify a bounding box that encloses the Anchor's children. This is a hint that may be used for optimization purposes. If the specified bounding box is smaller than the actual bounding box of the children at any time, the results are undefined. The default bboxSize value, (-1, -1, -1), implies that the bounding box is not specified and if needed must be calculated by the browser. A description of bboxCenter and bboxSize fields may be found in "2.6.4 Bounding boxes."
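The bounding-box hint can be illustrated with a short Python sketch. The default size means "compute it yourself", and a supplied box is only safe if it encloses every child. The helper names are hypothetical. A real browser works on transformed child geometry rather than on a bare list of points.

```python
from typing import Iterable, Sequence, Tuple

Vec3 = Sequence[float]


def bbox_is_specified(size: Vec3) -> bool:
    """bboxSize (-1, -1, -1), the default, means "not specified"."""
    return tuple(size) != (-1.0, -1.0, -1.0)


def compute_bbox(points: Iterable[Vec3]) -> Tuple[Tuple[float, ...], Tuple[float, ...]]:
    """What a browser might compute when no hint is given: (center, size)."""
    pts = list(points)
    lo = [min(p[i] for p in pts) for i in range(3)]
    hi = [max(p[i] for p in pts) for i in range(3)]
    center = tuple((a + b) / 2.0 for a, b in zip(lo, hi))
    size = tuple(b - a for a, b in zip(lo, hi))
    return center, size


def bbox_encloses(center: Vec3, size: Vec3, points: Iterable[Vec3]) -> bool:
    """True if every point lies inside the author-supplied box.

    False means the hint is too small, which gives undefined results.
    """
    half = [s / 2.0 for s in size]
    return all(abs(p[i] - center[i]) <= half[i] for p in points for i in range(3))


# Corners of a default Box {} (size 2 2 2) centred at the origin.
corners = [(-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)]
print(compute_bbox(corners))                         # ((0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
print(bbox_encloses((0, 0, 0), (2, 2, 2), corners))  # True
print(bbox_encloses((0, 0, 0), (1, 1, 1), corners))  # False: hint too small
```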

Anchor is equivalent to a prototype containing a couple of Group nodes, a TouchSensor, and a Script. It is a standard node partly because it makes it easier to convert VRML 1.0 files (which use WWWAnchor) to VRML 2.0, and partly because it is convenient to have simple hyperlinking support prepackaged.

There are many hyperlinking tasks for which Anchor is inadequate. For example, if you want a hyperlink to occur after the user has accomplished some task, then you must use a Script node that calls loadURL(). If you want to load several different pieces of information into several other frames you will also have to use a Script that makes several calls to loadURL(). The basic building blocks of Scripts and sensors allow you to do almost anything; the Anchor node is only meant to address the most basic hyperlinking tasks.

The following example illustrates typical use of the Anchor node. The first Anchor links the Box geometry to another VRML world that replaces this one after the Anchor is activated. The second Anchor links the Sphere to a Viewpoint in this world. When the user clicks on the Sphere, the browser's view is transported to the Viewpoint. The third Anchor links a Cone to a frame on an HTML page. When the user clicks on the Cone, the frame is activated:

```vrml
#VRML V2.0 utf8
Group { children [
  Transform {
    translation -5 0 0
    children Anchor {
      url "http://www.barbie.web/~barbie/dollhouse.wrl"
      description "Link to Barbie's Home Page pad"
      children Shape {
        geometry Box {}
        appearance DEF A1 Appearance {
          material Material {
            diffuseColor 1 1 1
            ambientIntensity 0.33
            specularColor 1 1 1
            shininess 0.5
          }
        }
      }
    }
  }
  Transform {
    children Anchor {
      url "#NiceView"
      description "Link to a nice view in this scene"
      children Shape { geometry Sphere {} appearance USE A1 }
    }
  }
  Transform {
    translation 5 0 0
    children Anchor {
      url "http://www.barbie.web/~barbie/index.html"
      description "Link to frame in Barbie's home page"
      parameter "target=name_of_frame"
      children Shape {
        geometry Cone {}
        appearance USE A1
      }
    }
  }
  DEF NiceView Viewpoint {
    position 0 0 -20
    description "A Nice View"
  }
]}
```


```vrml
Appearance {
  exposedField SFNode material          NULL
  exposedField SFNode texture           NULL
  exposedField SFNode textureTransform  NULL
}
```

The Appearance node specifies the visual properties of geometry by defining the Material and texture nodes. The value for each of the fields in this node can be NULL. However, if the field is non-NULL, it shall contain one node of the appropriate type.

The material field, if specified, shall contain a Material node. If the material field is NULL or unspecified, lighting is off (all lights are ignored during rendering of the object that references this Appearance) and the unlit object colour is (1, 1, 1). Details of the VRML lighting model are in "2.14 Lighting model."

The texture field, if specified, shall contain one of the various types of texture nodes (ImageTexture, MovieTexture, or PixelTexture). If the texture node is NULL or the texture field is unspecified, the object that references this Appearance is not textured.

The textureTransform field, if specified, shall contain a TextureTransform node. If the texture field is NULL or unspecified, or if the textureTransform is NULL or unspecified, the textureTransform field has no effect.

Appearance nodes should be shared whenever possible. DEF the first use of an Appearance node in the file and USE it for all subsequent Shapes with identical appearance values. This can result in memory savings and performance gains (depending on the browser implementation).

If the world is large and Appearance nodes are frequently shared, it may be handy to create a separate VRML file that contains all of the Appearance nodes, each with a PROTO name (e.g., A1, A2, GOLD, Shiny_Red). In the world file that contains the Shape nodes, insert one EXTERNPROTO at the top of the file for each Appearance to be used and then use the EXTERNPROTO name in the Shape definition. For example, the following file is the Appearance library (AppearanceLibrary.wrl) defining the Appearances to be used by another VRML file:

```vrml
#VRML V2.0 utf8
PROTO A1[] { Appearance {...} }
PROTO A2[] { Appearance {...} }
...
```

And here's how the Appearance library would be used:

```vrml
#VRML V2.0 utf8
EXTERNPROTO A1 [] "AppearanceLibrary.wrl#A1"  # List each one...
EXTERNPROTO A2 [] "AppearanceLibrary.wrl#A2"
...
Shape {
  appearance A1 { }
  ...
}
```

Note that this scheme can be used for a variety of different node types (e.g., Material).

The following example illustrates typical use of the Appearance node (see Figure 3-1):

```vrml
#VRML V2.0 utf8
Shape {
  appearance Appearance {
    material Material {
      specularColor 1 1 1
      shininess 0.2
    }
    texture ImageTexture { url "marble.gif" }
  }
  geometry Sphere { radius 1.3 }
}
Shape {
  appearance Appearance {
    material Material { diffuseColor 0.9 0.9 0.9 }
  }
  geometry Box {}
}
Background { skyColor 1 1 1 }
```


```vrml
AudioClip {
  exposedField SFString description      ""
  exposedField SFBool   loop             FALSE
  exposedField SFFloat  pitch            1.0   # (0,∞)
  exposedField SFTime   startTime        0     # (-∞,∞)
  exposedField SFTime   stopTime         0     # (-∞,∞)
  exposedField MFString url              []
  eventOut     SFTime   duration_changed
  eventOut     SFBool   isActive
}
```

An AudioClip node specifies audio data that can be referenced by other nodes that require an audio source.

The Sound node is the only node in VRML 2.0 that uses an audio source, and the AudioClip node is specified in the Sound's source field.

The description field specifies a textual description of the audio source. A browser is not required to display the description field but may choose to do so in addition to playing the sound.

The url field specifies the URL from which the sound is loaded. Browsers shall support at least the wavefile format in uncompressed PCM format (see [WAV]). It is recommended that browsers also support the MIDI file type 1 sound format (see [MIDI]); MIDI files are presumed to use the General MIDI patch set. Section "2.5 VRML and the World Wide Web" contains details on the url field. Results are not defined when the URL references unsupported data types.

A very small number of formats are required or recommended by the VRML specification so that content creators can create worlds that should work with any VRML implementation. Several criteria are used to decide which audio (and movie and texture) formats VRML implementations should be required to support:

In the particular case of audio, uncompressed .wav files were chosen because they met all of these criteria. Several different forms of compression for .wav files exist, but at the time VRML 2.0 was being designed, none was supported on, or widely used across, all platforms. MIDI is recommended as a very bandwidth-efficient way of transmitting musical information and nicely complements the more general (but much larger) .wav format.

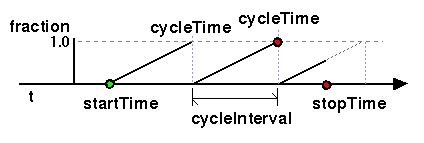

The loop, startTime, and stopTime exposedFields and the isActive eventOut, and their effects on the AudioClip node, are discussed in detail in "2.6.9 Time-dependent nodes." The "cycle" of an AudioClip is the length of time in seconds for one playing of the audio at the specified pitch.

The pitch field specifies a multiplier for the rate at which sampled sound is played. Only positive values shall be valid for pitch. A value of zero or less will produce undefined results. Changing the pitch field affects both the pitch and playback speed of a sound. A set_pitch event to an active AudioClip is ignored and no pitch_changed eventOut is generated. If pitch is set to 2.0, the sound shall be played one octave higher than normal and played twice as fast. For a sampled sound, the pitch field alters the sampling rate at which the sound is played. The proper implementation of pitch control for MIDI (or other note sequence sound clips) is to multiply the tempo of the playback by the pitch value and adjust the MIDI Coarse Tune and Fine Tune controls to achieve the proper pitch change.
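The arithmetic implied by the pitch description can be sketched in Python. A pitch of 2.0 halves the cycle and raises the sound 12 semitones (one octave), because each octave doubles the frequency. The split of the semitone shift into a whole-semitone Coarse Tune and a Fine Tune in cents is an assumption made for illustration. The helper name `pitch_effects` is also hypothetical.

```python
import math


def pitch_effects(duration: float, pitch: float) -> dict:
    """Summarise what a pitch multiplier does to a clip.

    duration is the clip's normal length in seconds at pitch 1.0.
    ASSUMPTION: Coarse Tune is in whole semitones and Fine Tune in cents.
    """
    if pitch <= 0.0:
        raise ValueError("pitch must be positive; zero or less is undefined")
    semitones = 12.0 * math.log2(pitch)  # one octave = 12 semitones
    coarse = round(semitones)
    return {
        "cycle_seconds": duration / pitch,  # played faster, so shorter
        "tempo_multiplier": pitch,          # for MIDI / note-sequence clips
        "coarse_tune_semitones": coarse,
        "fine_tune_cents": (semitones - coarse) * 100.0,
    }


print(pitch_effects(4.0, 2.0))
# {'cycle_seconds': 2.0, 'tempo_multiplier': 2.0, 'coarse_tune_semitones': 12, 'fine_tune_cents': 0.0}
```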

There are a large number of parameters that can be used to alter an audio sound track. VRML97 allows only the pitch and volume (which is specified in the intensity field of the Sound node) to be modified. This gives the world creator a lot of flexibility with a minimal number of "knobs" to tweak, making implementation reasonably easy.

A duration_changed event is sent whenever there is a new value for the "normal" duration of the clip. Typically, this will only occur when the current url in use changes and the sound data has been loaded, indicating that the clip is playing a different sound source. The duration is the length of time in seconds for one cycle of the audio for a pitch set to 1.0. Changing the pitch field will not trigger a duration_changed event. A duration value of "-1" implies that the sound data has not yet loaded or the value is unavailable for some reason.

The isActive eventOut can be used by other nodes to determine if the clip is currently active. If an AudioClip is active, it shall be playing the sound corresponding to the sound time (i.e., in the sound's local time system with sample 0 at time 0):

t = (now - startTime) modulo (duration / pitch)
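The formula can be evaluated directly. This Python sketch does only that. It assumes the clip is already known to be active and that its duration has loaded. `sound_time` is a hypothetical name.

```python
def sound_time(now: float, start_time: float, duration: float,
               pitch: float = 1.0) -> float:
    """Evaluate t = (now - startTime) modulo (duration / pitch), in seconds.

    Whether the clip is active at all (loop, stopTime, and so on) is
    covered in "2.6.9 Time-dependent nodes" and is not checked here.
    """
    if duration <= 0.0:
        # duration_changed reports -1 while the data is not yet available.
        raise ValueError("duration unknown")
    if pitch <= 0.0:
        raise ValueError("pitch must be positive")
    return (now - start_time) % (duration / pitch)


# A 4 s clip at pitch 2.0 has a 2 s cycle; 7 s after startTime the clip
# is 1 s into its fourth cycle.
print(sound_time(now=17.0, start_time=10.0, duration=4.0, pitch=2.0))  # 1.0
```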

You can think of AudioClip as the sound-generation equipment, while the Sound node functions as the sound-emitting equipment. AudioClip has all of the controls for starting and stopping the sound, looping it, and so forth. The Sound node controls how the sound is emitted: at what volume, where in space, and so on. A single AudioClip can be used with several different Sound nodes, just like a single tape player might be connected to several sets of speakers.

Be careful with how many audio tracks are playing simultaneously. Read the browser release notes carefully to discover how many tracks are supported simultaneously. It is generally safe to limit the number of audio tracks to two or three at one time. Use ProximitySensors and the min/maxFront and min/maxBack fields of the Sound node to localize sounds to nonoverlapping regions.

The following example creates two Sound nodes that employ AudioClip nodes. The first AudioClip is used for a repeating (loop TRUE) sound that emits from the center of the world. This example illustrates the case of a sound that is looping forever, starting when the user first enters the world. This is done by setting the loop field to TRUE and leaving the stopTime equal to the startTime (default for both is zero). The second AudioClip is played whenever the user enters or exits the box defined by the ProximitySensor:

```vrml
#VRML V2.0 utf8
Group { children [
  Sound {  # Looped soundtrack
    source DEF AC1 AudioClip {
      loop TRUE  # Loop forever
      url "doodoo.wav"
    }
    spatialize TRUE
    minFront 0
    maxFront 20
    minBack 0
    maxBack 20
  }
  Sound {  # Chimes when user goes through space near origin
    source DEF AC2 AudioClip { url "Chimes.wav" }
    minFront 20
    maxFront 100
    minBack 20
    maxBack 100
  }
  DEF PS ProximitySensor { center 0 5 0 size 10 10 10 }
  Shape {
    geometry Box { size 5 0.05 5 }
    appearance Appearance { material Material {} }
  }
  Shape {  # Floor
    geometry IndexedFaceSet {
      coord Coordinate {
        point [ -50 0 -50, -50 0 50, 50 0 50, 50 0 -50 ]
      }
      coordIndex [ 0 1 2 3 ]
    }
  }
  Viewpoint {
    position 0 1 25
    description "Outside sound ranges"
  }
  Viewpoint {
    position 0 1 2
    description "Inside sound ranges"
  }
]}
# Sound bell when user enters/exits 10x10x10 space near origin
ROUTE PS.enterTime TO AC2.set_startTime
ROUTE PS.exitTime TO AC2.set_startTime
```


```vrml
Background {
  eventIn      SFBool   set_bind
  exposedField MFFloat  groundAngle  []         # [0,π/2]
  exposedField MFColor  groundColor  []         # [0,1]
  exposedField MFString backUrl      []
  exposedField MFString bottomUrl    []
  exposedField MFString frontUrl     []
  exposedField MFString leftUrl      []
  exposedField MFString rightUrl     []
  exposedField MFString topUrl       []
  exposedField MFFloat  skyAngle     []         # [0,π]
  exposedField MFColor  skyColor     [ 0 0 0 ]  # [0,1]
  eventOut     SFBool   isBound
}
```

The Background node is used to specify a colour backdrop that simulates ground and sky, as well as a background texture, or panorama, that is placed behind all geometry in the scene and in front of the ground and sky. Background nodes are specified in the local coordinate system and are affected by the accumulated rotation of their ancestors as described below.

Background nodes are bindable nodes as described in "2.6.10 Bindable children nodes." There exists a Background stack, in which the top-most Background on the stack is the currently active Background. To move a Background to the top of the stack, a TRUE value is sent to the set_bind eventIn. Once active, the Background is then bound to the browser's view. A FALSE value sent to set_bind removes the Background from the stack and unbinds it from the browser's view. More details on the bind stack may be found in "2.6.10 Bindable children nodes."
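The bind-stack behaviour summarised above can be sketched as a small Python class. This is a simplified model, not the normative rules in "2.6.10 Bindable children nodes". Nodes are plain name strings, and `events` records the isBound eventOuts a browser would be expected to send. The class and method names are hypothetical.

```python
from typing import List, Optional, Tuple


class BindStack:
    """Simplified model of a bindable-node stack, e.g. for Background."""

    def __init__(self) -> None:
        self.stack: List[str] = []                # last element = bound node
        self.events: List[Tuple[str, bool]] = []  # (node, isBound value) sent

    def top(self) -> Optional[str]:
        return self.stack[-1] if self.stack else None

    def set_bind(self, node: str, value: bool) -> None:
        old_top = self.top()
        if value:
            if node == old_top:
                return                   # already bound: no effect
            if node in self.stack:
                self.stack.remove(node)  # move it rather than duplicate it
            self.stack.append(node)
            if old_top is not None:
                self.events.append((old_top, False))
            self.events.append((node, True))
        elif node in self.stack:
            self.stack.remove(node)
            if node == old_top:          # unbinding the active node
                self.events.append((node, False))
                new_top = self.top()
                if new_top is not None:
                    self.events.append((new_top, True))


stack = BindStack()
stack.set_bind("B1", True)   # first Background read is bound automatically
stack.set_bind("B2", True)   # e.g. TouchSensor.isActive TRUE routed to set_bind
stack.set_bind("B2", False)  # released: B1 becomes active again
print(stack.top())           # B1
print(stack.events)
# [('B1', True), ('B1', False), ('B2', True), ('B2', False), ('B1', True)]
```

The usage lines follow the same pattern as routing a TouchSensor's isActive to a second Background's set_bind.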

The backdrop is conceptually a partial sphere (the ground) enclosed inside of a full sphere (the sky) in the local coordinate system with the viewer placed at the centre of the spheres. Both spheres have infinite radius (one epsilon apart) and each is painted with concentric circles of interpolated colour perpendicular to the local Y-axis of the sphere. The Background node is subject to the accumulated rotations of its ancestors' transformations. Scaling and translation transformations are ignored. The sky sphere is always slightly farther away from the viewer than the ground sphere causing the ground to appear in front of the sky in cases where they overlap.

The skyColor field specifies the colour of the sky at various angles on the sky sphere. The first value of the skyColor field specifies the colour of the sky at 0.0 radians representing the zenith (i.e., straight up from the viewer). The skyAngle field specifies the angles from the zenith in which concentric circles of colour appear. The zenith of the sphere is implicitly defined to be 0.0 radians, the natural horizon is at π/2 radians, and the nadir (i.e., straight down from the viewer) is at π radians. skyAngle is restricted to non-decreasing values in the range [0.0, π].

There must be one more skyColor value than there are skyAngle values. The first colour value is the colour at the zenith, which is not specified in the skyAngle field. If the last skyAngle is less than π, then the colour band between the last skyAngle and the nadir is clamped to the last skyColor. The sky colour is linearly interpolated between the specified skyColor values.
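The sky rules can be sketched in Python as follows: an implicit zenith entry, linear interpolation between bands, and clamping past the last angle. The sketch assumes interpolation is linear in angle. `sky_colour` is a hypothetical helper name. The sample values are those of the "Gray ramped sky" Background in the example later in this section.

```python
import bisect
import math
from typing import Sequence, Tuple

Colour = Tuple[float, float, float]


def sky_colour(angle: float, sky_angle: Sequence[float],
               sky_color: Sequence[Colour]) -> Colour:
    """Colour of the sky `angle` radians from the zenith (0 = up, pi = down)."""
    if len(sky_color) != len(sky_angle) + 1:
        raise ValueError("there must be one more skyColor than skyAngle")
    angle = min(max(angle, 0.0), math.pi)
    # The zenith colour sits at an implicit angle of 0.0.
    angles = [0.0] + list(sky_angle)
    if angle >= angles[-1]:
        # Past the last skyAngle the band is clamped to the last colour.
        return tuple(sky_color[-1])
    # Band containing `angle`: angles[i] <= angle < angles[i + 1].
    i = bisect.bisect_right(angles, angle) - 1
    f = (angle - angles[i]) / (angles[i + 1] - angles[i])
    c0, c1 = sky_color[i], sky_color[i + 1]
    return tuple(a + f * (b - a) for a, b in zip(c0, c1))


ramp = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]  # black at zenith, white by 1.6 rad
print(sky_colour(0.8, [1.6], ramp))        # (0.5, 0.5, 0.5)
print(sky_colour(2.0, [1.6], ramp))        # (1.0, 1.0, 1.0)
```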

The groundColor field specifies the colour of the ground at the various angles on the ground hemisphere. The first value of the groundColor field specifies the colour of the ground at 0.0 radians representing the nadir (i.e., straight down from the user). The groundAngle field specifies the angles from the nadir at which the concentric circles of colour appear. The nadir of the sphere is implicitly defined at 0.0 radians. groundAngle is restricted to non-decreasing values in the range [0.0, π/2]. There must be one more groundColor value than there are groundAngle values. The first colour value is for the nadir, which is not specified in the groundAngle field. If the last groundAngle is less than π/2 (the usual case), the region between the last groundAngle and the equator is invisible. The ground colour is linearly interpolated between the specified groundColor values.
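The ground differs from the sky in two ways. Angles are measured from the nadir, and the band past the last groundAngle is invisible rather than clamped. A Python sketch follows, under the same assumption of linear interpolation in angle. `nadir_angle` and `ground_colour` are hypothetical names. None stands for "no ground here, so the sky shows through". The sample values are those of the "Gray ramped sky" Background in the example later in this section.

```python
import bisect
import math
from typing import Optional, Sequence, Tuple

Colour = Tuple[float, float, float]


def nadir_angle(dx: float, dy: float, dz: float) -> float:
    """Angle in radians between a view direction and straight down (-Y).

    The direction is assumed to be in the Background node's local frame.
    """
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    # Angle from -Y is acos(-dy / |d|); clamp against rounding error.
    return math.acos(max(-1.0, min(1.0, -dy / length)))


def ground_colour(angle: float, ground_angle: Sequence[float],
                  ground_color: Sequence[Colour]) -> Optional[Colour]:
    """Ground colour `angle` radians from the nadir, or None if invisible."""
    if not ground_color:
        return None  # default: no ground at all
    if len(ground_color) != len(ground_angle) + 1:
        raise ValueError("there must be one more groundColor than groundAngle")
    angle = max(angle, 0.0)
    angles = [0.0] + list(ground_angle)
    if angle > angles[-1]:
        return None  # beyond the last band: the sky shows through
    if angle == angles[-1]:
        return tuple(ground_color[-1])
    i = bisect.bisect_right(angles, angle) - 1
    f = (angle - angles[i]) / (angles[i + 1] - angles[i])
    c0, c1 = ground_color[i], ground_color[i + 1]
    return tuple(a + f * (b - a) for a, b in zip(c0, c1))


ground = [(1.0, 1.0, 1.0), (0.8, 0.8, 0.8), (0.2, 0.2, 0.2)]
print(ground_colour(nadir_angle(0, -1, 0), [1.2, 1.57], ground))  # (1.0, 1.0, 1.0)
print(ground_colour(nadir_angle(0, 0, -1), [1.2, 1.57], ground))  # None
```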

The backUrl, bottomUrl, frontUrl, leftUrl, rightUrl, and topUrl fields specify a set of images that define a background panorama between the ground/sky backdrop and the scene's geometry. The panorama consists of six images, each of which is mapped onto a face of an infinitely large cube contained within the backdrop spheres and centred in the local coordinate system. The images are applied individually to each face of the cube. On the front, back, right, and left faces of the cube, when viewed from the origin looking down the negative Z-axis with the Y-axis as the view up direction, each image is mapped onto the corresponding face with the same orientation as if the image were displayed normally in 2D (backUrl to back face, frontUrl to front face, leftUrl to left face, and rightUrl to right face). On the top face of the cube, when viewed from the origin looking along the +Y-axis with the +Z-axis as the view up direction, the topUrl image is mapped onto the face with the same orientation as if the image were displayed normally in 2D. On the bottom face of the cube, when viewed from the origin along the negative Y-axis with the negative Z-axis as the view up direction, the bottomUrl image is mapped onto the face with the same orientation as if the image were displayed normally in 2D.
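Which panorama image a viewer sees in a given direction depends only on which face of the cube that direction hits. That face is determined by the largest-magnitude component of the direction vector. The Python sketch below assumes the face layout described above: frontUrl ahead along -Z, backUrl along +Z, rightUrl along +X, leftUrl along -X, topUrl along +Y, and bottomUrl along -Y. `panorama_face` is a hypothetical helper, and directions exactly on a cube edge are resolved arbitrarily.

```python
def panorama_face(dx: float, dy: float, dz: float) -> str:
    """Name the url field whose image a local view direction hits first."""
    ax, ay, az = abs(dx), abs(dy), abs(dz)
    if ax == ay == az == 0:
        raise ValueError("direction must be non-zero")
    # The dominant axis picks the face; ties favour Y, then X.
    if ay >= ax and ay >= az:
        return "topUrl" if dy > 0 else "bottomUrl"
    if ax >= az:
        return "rightUrl" if dx > 0 else "leftUrl"
    return "backUrl" if dz > 0 else "frontUrl"


print(panorama_face(0.0, 0.0, -1.0))  # frontUrl (the default view direction)
print(panorama_face(0.3, 0.9, -0.2))  # topUrl
```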

Figure 3-2 illustrates the Background node backdrop and background textures.

Alpha values in the panorama images (i.e., two or four component images) specify that the panorama is semi-transparent or transparent in regions, allowing the groundColor and skyColor to be visible.

See "2.6.11 Texture maps" for a general description of texture maps.

Often, the bottomUrl and topUrl images will not be specified, to allow sky and ground to show. The other four images may depict surrounding mountains or other distant scenery. Browsers shall support the JPEG (see [JPEG]) and PNG (see [PNG]) image file formats, and in addition, may support any other image format (e.g., CGM) that can be rendered into a 2D image. Support for the GIF (see [GIF]) format is recommended (including transparency). Details on the url fields may be found in "2.5 VRML and the World Wide Web."

Wasabi Software sells a wonderful tool for creating Background panoramas. Download and try SkyPaint to easily create beautiful backdrops for your VRML worlds.

The panorama URLs behave like ImageTexture nodes. It might have been nice to specify each as a texture instead of as a URL. That is, instead of MFString backUrl, the Background node could have had an SFNode backTexture field that pointed to an ImageTexture, PixelTexture, or MovieTexture. This would have allowed animated backgrounds. However, this generalization was noticed too late in the VRML 2.0 definition process, so only static backgrounds are supported (which is probably a good thing, since implementations might have trouble supporting animated backgrounds).

Panorama images may be one component (greyscale), two component (greyscale plus alpha), three component (full RGB colour), or four-component (full RGB colour plus alpha).

Ground colours, sky colours, and panoramic images do not translate with respect to the viewer, though they do rotate with respect to the viewer. That is, the viewer can never get any closer to the background, but can turn to examine all sides of the panorama cube, and can look up and down to see the concentric rings of ground and sky (if visible).

Remember that the panorama is rendered in front of the ground and sky. When using a panorama, the ground and sky should not be specified unless the panorama is partially transparent, as a result of using two- or four-component images with transparency.

Background is not affected by Fog nodes. Therefore, if a Background node is active (i.e., bound) while a Fog node is active, then the Background node will be displayed with no fogging effects. It is the author's responsibility to set the Background values to match the Fog values (e.g., ground colours fade to fog colour with distance and panorama images tinted with fog colour).

The first Background node found during reading of the world is automatically bound (receives set_bind TRUE) and is used as the initial background when the world is loaded.

The default Background node is entirely black. If you simply want to set a single color to be used for the background, insert a Background node into your scene with a single sky color that is the right color. Implementations should optimize for this case and clear the window to that color before drawing the scene.

The Background node provides functionality similar to Apple's QuickTimeVR with its panoramic images. The user can be restricted to one spot using a NavigationInfo that specifies a speed of navigation of 0.0, and can only turn to look at the background images that can give the illusion of a full 3D environment. By binding and unbinding Background nodes as the user clicks on TouchSensors or as Script nodes execute, the user can be given the illusion of moving through a 3D space when it is, in reality, a set of prerendered views.

The following syntax illustrates two typical examples of the Background node (see Figure 3-3). The first Background node specifies the sky and ground colors, but does not specify panoramic images. This typically results in faster rendering. A TouchSensor was added to the scene that is used to bind the second Background node when the user clicks and holds over the flagpole. The second Background node defines a panoramic image of the night sky. Note that since the panorama is completely opaque and is rendered in front of the ground and sky, there is no point in specifying ground or sky values. Since there is no ground plane geometry defined in the scene, binding the second Background creates an illusion of floating in space:

```vrml
#VRML V2.0 utf8
Transform { children [
  DEF B1 Background {  # Gray ramped sky
    skyColor [ 0 0 0, 1.0 1.0 1.0 ]
    skyAngle 1.6
    groundColor [ 1 1 1, 0.8 0.8 0.8, 0.2 0.2 0.2 ]
    groundAngle [ 1.2, 1.57 ]
  }
  DEF B2 Background {  # Night sky
    backUrl "Bg.gif"
    leftUrl "Bg.gif"
    bottomUrl "Bg.gif"
    frontUrl "Bg.gif"
    rightUrl "Bg.gif"
    topUrl "Bg.gif"
  }
  Transform { children [  # Click flag and hold to see Night sky
    DEF TS TouchSensor {}
    Shape {  # Flag and flag-pole at origin
      appearance DEF A Appearance { material Material {} }
      geometry IndexedFaceSet {
        coord Coordinate {
          point [ -.1 0 -.1, 0 0 .1, .1 0 -.1,
                  -.1 3 -.1, 0 3 .1, .1 3 -.1,
                  .1 2.4 0, .1 2.9 0, -1.4 2.65 -.8 ]
        }
        coordIndex [ 0 1 4 3 -1 1 2 5 4 -1
                     2 0 3 5 -1 3 4 5 -1 6 7 8 ]
      }
    }
    Shape {  # Floor
      appearance USE A
      geometry IndexedFaceSet {
        coord Coordinate { point [ -2 0 -2, -2 0 2,
                                    2 0 2, 2 0 -2 ] }
        coordIndex [ 0 1 2 3 ]
      }
    }
    DirectionalLight { direction -0.707 -.707 0 intensity 1 }
  ]}
  Viewpoint { position 0 1.5 10 }
]}
ROUTE TS.isActive TO B2.set_bind
```


```vrml
Billboard {
  eventIn      MFNode   addChildren
  eventIn      MFNode   removeChildren
  exposedField SFVec3f  axisOfRotation  0 1 0     # (-∞,∞)
  exposedField MFNode   children        []
  field        SFVec3f  bboxCenter      0 0 0     # (-∞,∞)
  field        SFVec3f  bboxSize        -1 -1 -1  # (0,∞) or -1,-1,-1
}
```

The Billboard node is a grouping node which modifies its coordinate system so that the Billboard node's local Z-axis turns to point at the viewer. The Billboard node has children which may be other children nodes.

The axisOfRotation field specifies which axis to use to perform the rotation. This axis is defined in the local coordinate system.

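In general, the following steps describe how to rotate the billboard to face the viewer:

a. Compute the vector from the Billboard node's origin to the viewer's position. This vector is called the billboard-to-viewer vector.

b. Compute the plane defined by the axisOfRotation and the billboard-to-viewer vector.

c. Rotate the local Z-axis of the billboard into the plane from step b, pivoting around the axisOfRotation.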

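A special case of billboarding is viewer-alignment. In this case, the object rotates to keep the billboard's local Y-axis parallel with the viewer's up vector. This special case is distinguished by setting the axisOfRotation to (0, 0, 0). The following steps describe how to align the billboard's Y-axis to the viewer's up vector:

a. Compute the billboard-to-viewer vector.

b. Rotate the Z-axis of the billboard to be collinear with the billboard-to-viewer vector and pointing towards the viewer's position.

c. Rotate the Y-axis of the billboard to be parallel to, and oriented in the same direction as, the up vector of the viewer.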

Screen-aligned (viewer-aligned) billboards are especially useful for labels that follow the viewer and are always readable. Typically, a Shape containing a Text node or an ImageTexture is placed under a Billboard node whose axisOfRotation is set to (0, 0, 0). See the screen-aligned label in the example later in this section.

When the axisOfRotation and the billboard-to-viewer line are coincident, the plane cannot be established and the resulting rotation of the billboard is undefined. For example, if the axisOfRotation is set to (0,1,0) (Y-axis) and the viewer flies over the billboard and peers directly down the Y-axis, the results are undefined.
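The rotation described above, for a non-zero axisOfRotation and for the (0, 0, 0) viewer-aligned case, can be made concrete with a short Python sketch. It is an illustration, not code from the specification: the helper names are hypothetical, the viewer position is assumed to be given in the Billboard's coordinate system before its own rotation is applied, and in the viewer-aligned case the Y-axis is taken as the part of the up vector perpendicular to the line of sight. Both functions return None for the undefined configurations just described.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def reject(v: Vec3, a: Vec3) -> Vec3:
    """Component of v perpendicular to the unit vector a."""
    k = dot(v, a)
    return (v[0] - k * a[0], v[1] - k * a[1], v[2] - k * a[2])


def normalize(v: Vec3, eps: float = 1e-9) -> Optional[Vec3]:
    n = math.sqrt(dot(v, v))
    return None if n < eps else (v[0] / n, v[1] / n, v[2] / n)


def billboard_angle(axis: Vec3, viewer: Vec3) -> Optional[float]:
    """General case: angle in radians (counterclockwise about `axis`) that
    turns local +Z into the plane containing the axis and the
    billboard-to-viewer vector. With the billboard origin at (0, 0, 0),
    the billboard-to-viewer vector is simply `viewer`.
    Returns None when the result is undefined."""
    a = normalize(axis)
    if a is None:
        return None                      # (0, 0, 0): use viewer_aligned_axes
    # Rotation about `a` only moves components perpendicular to `a`.
    p = normalize(reject(viewer, a))     # viewer direction, projected
    z = normalize(reject((0.0, 0.0, 1.0), a))
    if p is None or z is None:
        return None                      # viewer on the axis: undefined
    return math.atan2(dot(cross(z, p), a), dot(z, p))


def viewer_aligned_axes(viewer: Vec3, up: Vec3) -> Optional[Tuple[Vec3, Vec3, Vec3]]:
    """axisOfRotation (0, 0, 0): the billboard's rotated X, Y, Z axes.
    Z points at the viewer; Y is taken as the part of the viewer's up
    vector perpendicular to Z (an assumption when the two are not
    already perpendicular)."""
    z = normalize(viewer)
    x = normalize(cross(up, z)) if z is not None else None
    if z is None or x is None:
        return None                      # degenerate configuration
    y = cross(z, x)                      # unit length: z and x are orthonormal
    return x, y, z
```

Because both VRML rotations and this sketch use the right-hand rule, the axis and the angle returned by billboard_angle could be written directly as an SFRotation value (axis x, y, z followed by the angle).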

Multiple instances of Billboard nodes (DEF/USE) operate as expected: each instance rotates in its unique coordinate system to face the viewer.

Section "2.6.5 Grouping and children nodes" provides a description of the children, addChildren, and removeChildren fields and eventIns.

The bboxCenter and bboxSize fields specify a bounding box that encloses the Billboard node's children. This is a hint that may be used for optimization purposes. If the specified bounding box is smaller than the actual bounding box of the children at any time, the results are undefined. A default bboxSize value, (-1, -1, -1), implies that the bounding box is not specified and if needed must be calculated by the browser. A description of the bboxCenter and bboxSize fields is contained in "2.6.4 Bounding boxes."

The Billboard node is really just a very fancy Transform node that modifies its own rotation based on the relationship between the Transform node and the user's view. In fact, a Billboard could be prototyped that way by combining a Transform node, a ProximitySensor to detect the user's view, and a Script to perform the necessary computations. However, Billboard transformations must be updated whenever the viewer moves, and it is much more efficient for the Billboard functionality to be built into VRML implementations rather than left to Script nodes.

Billboards are often used with transparent textured rectangles to approximate 3D geometry with a 2D "cutout," also known as a sprite. If you have images of trees (with appropriate transparency values in the image), you might define a sprite prototype as

PROTO Sprite [ field MFString texture [ ] ]

{

Billboard {

axisOfRotation 0 1 0 # Rotate about Y (up) axis

children Shape {

appearance Appearance {

texture ImageTexture { url IS texture }

}

geometry IndexedFaceSet {

coord Coordinate {

point [ 0 0 0 1 0 0 1 1 0 0 1 0 ]

}

texCoord TextureCoordinate {

point [ 0 0 1 0 1 1 0 1 ]

}

coordIndex [ 0 1 2 3 -1 ]

}

}

}

}

then place several tree cutouts in your scene, like this:

Transform {

translation 13.4 0 55.0

children Sprite { texture "Oak.png" }

}

Transform {

translation -14.92 0 23

children Sprite { texture "Maple.png" }

}

Objects defined like this may be much faster both to create and to display than objects defined using a lot of polygons.

The following example illustrates typical use of the Billboard node (see Figure 3-5). The first Billboard defines a tree by specifying a four-component image texture that billboards about its Y-axis. This is one of the most typical uses of Billboard. The second Billboard node is almost identical to the first, but billboards around its X-axis. The third Billboard node illustrates the use of the screen-aligned billboard by setting the axisOfRotation field to (0,0,0):

#VRML V2.0 utf8

Transform { children [

Transform {

translation 5 0 0

children DEF TREE Billboard { # Billboard about Y-axis

children DEF S Shape {

geometry IndexedFaceSet {

coord Coordinate {

point [ -2 0 0, 2 0 0, 2 5 0, -2 5 0 ]

}

texCoord TextureCoordinate {

point [ 0 0, 1 0, 1 1, 0 1 ]

}

coordIndex [ 0 1 2 3 ]

}

appearance Appearance {

texture ImageTexture { url "Tree.gif" }

}

}

}

}

Transform {

translation -6 0 -1

children Billboard { # Billboard about X-axis

axisOfRotation 1 0 0

children USE S

}

}

Transform { # Screen-aligned label for flag-pole

translation 0 3.3 0

children Billboard {

axisOfRotation 0 0 0

children Shape {

geometry Text {

string "Top of flag pole"

fontStyle FontStyle { size 0.5 }

}

appearance Appearance {

material Material { diffuseColor 0 0 0 }

}

}

}

}

Billboard { # Flagpole at origin

axisOfRotation 0 1 0

children Shape {

appearance DEF A Appearance { material Material {} }

geometry IndexedFaceSet {

coord Coordinate {

point [ -.1 0 -.1, 0 0 .1, .1 0 -.1,

-.1 3 -.1, 0 3 .1, .1 3 -.1,

.1 2.4 0, .1 2.9 0, -1.4 2.65 -.8 ]

}

coordIndex [ 0 1 4 3 -1 1 2 5 4 -1

2 0 3 5 -1 3 4 5 -1 6 7 8 ]

}

}

}

Shape { # Floor

appearance Appearance {

texture ImageTexture { url "marble.gif" }

}

geometry IndexedFaceSet {

coord Coordinate {

point [ -50 0 -50, -50 0 50, 50 0 50, 50 0 -50 ]

}

coordIndex [ 0 1 2 3 ]

}

}

DirectionalLight { direction 0 -1 0 } # shine down onto the floor

Viewpoint { position 0 1.5 10 }

Background { skyColor 1 1 1 }

]}


Box {
  field  SFVec3f  size  2 2 2    # (0,∞)
}

The Box node specifies a rectangular parallelepiped box centred at (0, 0, 0) in the local coordinate system and aligned with the local coordinate axes. By default, the box measures 2 units in each dimension, from -1 to +1. The Box node's size field specifies the extents of the box along the X-, Y-, and Z-axes respectively and each component value must be greater than 0.0. Figure 3-6 illustrates the Box node.

Textures are applied individually to each face of the box. On the front (+Z), back (-Z), right (+X), and left (-X) faces of the box, when viewed from the outside with the +Y-axis up, the texture is mapped onto each face with the same orientation as if the image were displayed normally in 2D. On the top face of the box (+Y), when viewed from above and looking down the Y-axis toward the origin with the -Z-axis as the view up direction, the texture is mapped onto the face with the same orientation as if the image were displayed normally in 2D. On the bottom face of the box (-Y), when viewed from below looking up the Y-axis toward the origin with the +Z-axis as the view up direction, the texture is mapped onto the face with the same orientation as if the image were displayed normally in 2D. TextureTransform affects the texture coordinates of the Box.
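The face-by-face orientation rules can be summarized as a lookup from each face to the image directions described above. This Python sketch is derived from that prose rather than taken from any implementation; the face labels and function name are hypothetical, and it assumes the default mapping with no TextureTransform.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def box_tex_coord(face: str, p: Vec3,
                  size: Vec3 = (2.0, 2.0, 2.0)) -> Tuple[float, float]:
    """(s, t) for a point p on one face of a Box centred at the origin.

    face is one of '+x', '-x', '+y', '-y', '+z', '-z'. p is not checked
    to lie on that face."""
    # Normalize each coordinate to [-1, 1] across the box.
    x, y, z = (2.0 * c / d for c, d in zip(p, size))
    # (horizontal, vertical) image directions for each face, as seen
    # from the viewpoint the prose describes for that face.
    directions: Dict[str, Tuple[float, float]] = {
        "+z": (x, y),    # front, +Y up: image right is +X
        "-z": (-x, y),   # back, seen from behind: image right is -X
        "+x": (-z, y),   # right side: image right is -Z
        "-x": (z, y),    # left side: image right is +Z
        "+y": (x, -z),   # top, -Z is image "up"
        "-y": (x, z),    # bottom, +Z is image "up"
    }
    h, v = directions[face]
    return (h + 1.0) / 2.0, (v + 1.0) / 2.0
```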

The Box node's geometry requires outside faces only. When viewed from the inside the results are undefined.

Box nodes are specified in the geometry field of a Shape node; they may not be children of a Transform or Group node.

Box was called Cube in VRML 1.0 (which was a misnomer, because its width, height, and depth could be varied). Implementations usually draw boxes as 12 triangles (two per face), so keep this in mind if you are tempted to create a scene that contains 1,000 boxes: that is 12,000 triangles. If you can build the same scene with fewer than 12,000 triangles in an IndexedFaceSet, use the IndexedFaceSet instead.

The size field of Box is not exposed and so cannot change once the Box has been created. This was done to make very efficient, lightweight implementations possible.

To change the size of a Box node after it is created, use a Script node that sends changes to the Transform node that parents the Shape containing the Box:

DEF BoxTransform Transform {

children Shape {

geometry Box { size 3 4 2 } # initial box size

}

}

...

DEF BoxScaler Script {

eventIn ... # An event triggers the change.

eventOut SFVec3f scale # Output that changes the Box's size.

url "..." # Script that computes scale values.

}

ROUTE BoxScaler.scale TO BoxTransform.scale
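The Script's actual computation is elided above (url "..."). As one hypothetical possibility, the scale to send is simply the ratio of the desired size to the size the Box was created with. The sketch uses Python only for illustration (VRML Script nodes are normally written in Java or ECMAScript), and the function name is an assumption.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def box_scale(initial_size: Vec3, desired_size: Vec3) -> Vec3:
    """Scale for the parent Transform so that a Box created with
    `initial_size` appears with `desired_size`."""
    if any(d <= 0 for d in initial_size + desired_size):
        raise ValueError("Box sizes must be greater than 0.0")
    # Per-axis ratio: the Transform multiplies the Box's extents by this.
    return tuple(d / i for i, d in zip(initial_size, desired_size))
```

Because the scale is applied by the parent Transform, it affects every child of that Transform, so the Shape containing the Box should be its only child.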

The following example illustrates the use of the Box node (see Figure 3-7). Note the default mapping of the texture on the faces of the box:

#VRML V2.0 utf8

Transform { children [

Shape {

geometry Box { }

appearance Appearance {

material Material { diffuseColor 1 1 1 }

texture ImageTexture { url "marble2.gif" }

}

}

Shape {

geometry Box { size 1 1 3 }

appearance Appearance {

material Material { diffuseColor 0.8 0.8 0.8 }

}

}

Shape {

geometry Box { size 3 1 1 }

appearance Appearance {

material Material { diffuseColor 0.6 0.6 0.6 }

}

}

Shape {

geometry Box { size 1 3 1 }

appearance Appearance {

material Material { diffuseColor 1 1 1 }

}

}

NavigationInfo { type "EXAMINE" }

Background { skyColor 1 1 1 }

]}


Collision {
  eventIn      MFNode   addChildren
  eventIn      MFNode   removeChildren
  exposedField MFNode   children        []
  exposedField SFBool   collide         TRUE
  field        SFVec3f  bboxCenter      0 0 0     # (-∞,∞)
  field        SFVec3f  bboxSize        -1 -1 -1  # (0,∞) or -1,-1,-1
  field        SFNode   proxy           NULL
  eventOut     SFTime   collideTime
}

The Collision node is a grouping node that specifies the collision detection properties for its children (and their descendants), specifies surrogate objects that replace its children during collision detection, and sends events signaling that a collision has occurred between the user's avatar and the Collision node's geometry or surrogate. By default, all geometric nodes in the scene are collidable with the viewer except IndexedLineSet, PointSet, and Text. Browsers shall detect geometric collisions between the user's avatar (see NavigationInfo) and the scene's geometry, and prevent the avatar from 'entering' the geometry.

If there are no Collision nodes specified in a scene, browsers shall detect collision with all objects during navigation.

Section "2.6.5 Grouping and children nodes" contains a description of the children, addChildren, and removeChildren fields and eventIns.

The Collision node's collide field enables and disables collision detection. If collide is set to FALSE, the children and all descendants of the Collision node shall not be checked for collision, even though they are drawn. This includes any descendent Collision nodes that have collide set to TRUE (i.e., setting collide to FALSE turns collision off for every node below it).

Collision nodes with the collide field set to TRUE detect the nearest collision with their descendent geometry (or proxies). Not all geometry is collidable. Each geometry node specifies its own collision characteristics. When the nearest collision is detected, the collided Collision node sends the time of the collision through its collideTime eventOut. This behaviour is recursive. If a Collision node contains a child, descendant, or proxy (see below) that is a Collision node, and both Collision nodes detect that a collision has occurred, both send a collideTime event at the same time.

The geometries that are not capable of colliding are IndexedLineSet, PointSet, and Text. Detecting collisions between 2D or 1D geometries and the 3D viewer is difficult, so they are defined to be transparent to collisions. If this is a problem, a proxy geometry (discussed later) can be specified for each IndexedLineSet, PointSet, and Text.

Surface properties (e.g., transparent textures or materials) have no effect on collisions. This isn't very realistic, but it can be very useful and makes Collision much easier to implement. Again, a Collision proxy geometry may be used if you want collision testing to match partially transparent geometry.

The bboxCenter and bboxSize fields specify a bounding box that encloses the Collision node's children. This is a hint that may be used for optimization purposes. If the specified bounding box is smaller than the actual bounding box of the children at any time, the results are undefined. A default bboxSize value, (-1, -1, -1), implies that the bounding box is not specified and if needed must be calculated by the browser. A description of the bboxCenter and bboxSize fields may be found in "2.6.4 Bounding boxes".

The collision proxy, defined in the proxy field, is any legal children node as described in "2.6.5 Grouping and children nodes" that is used as a substitute for the Collision node's children during collision detection. The proxy is used strictly for collision detection; it is not drawn.

If the value of the collide field is FALSE, collision detection is not performed with the children or proxy descendent nodes. If the root node of a scene is a Collision node with the collide field set to FALSE, collision detection is disabled for the entire scene regardless of whether descendent Collision nodes have set collide TRUE.

If the value of the collide field is TRUE and the proxy field is non-NULL, the proxy field defines the scene on which collision detection is performed. If the proxy value is NULL, collision detection is performed against the children of the Collision node.

If proxy is specified, any descendent children of the Collision node are ignored during collision detection. If children is empty, collide is TRUE, and proxy is specified, collision detection is performed against the proxy but nothing is displayed. In this manner, invisible collision objects may be supported.
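The rules for collide, proxy, and children can be summarized as a recursive walk. This Python sketch is a simplified, hypothetical model: Shape, Transform, and other grouping nodes are omitted, each Geometry stands for a Shape's geometry, and the class and function names are illustrative. It returns the names of the geometry that would be tested against the avatar.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Union


@dataclass
class Geometry:
    """Stands in for a Shape's geometry (hypothetical simplified model)."""
    name: str
    collidable: bool = True   # False for IndexedLineSet, PointSet and Text


@dataclass
class Collision:
    children: List["Node"] = field(default_factory=list)
    collide: bool = True
    proxy: Optional["Node"] = None


Node = Union[Geometry, Collision]


def collision_targets(node: Node) -> List[str]:
    """Names of the geometry actually tested against the avatar."""
    if isinstance(node, Geometry):
        return [node.name] if node.collidable else []
    if not node.collide:
        return []            # nothing below is tested, even nested collide TRUE
    if node.proxy is not None:
        # The proxy replaces the children for collision; children are ignored.
        return collision_targets(node.proxy)
    targets: List[str] = []
    for child in node.children:
        targets.extend(collision_targets(child))
    return targets
```

A Collision with no children but a proxy yields only the proxy's geometry, which is the invisible collision object described above.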

Navigating in 3D worlds can often be difficult. Whenever possible, use the Collision node with a simple, invisible proxy geometry (e.g., a force field) to constrain avatar navigation to the regions of the world that are intended to be navigated (this also increases the performance of collision detection). This technique prevents avatars from getting "stuck" in tight spots, wandering around aimlessly, or investigating portions of the scene that are not intended to be seen. Combining this with Anchors for guided tours or reference points can greatly improve world usability. When using invisible Collision objects to constrain avatars, it is recommended that a sound effect be played on collision with the invisible geometry so that the user receives extra feedback that the "force field" exists (route the Collision node's collideTime eventOut to the startTime of the AudioClip used by a Sound node).

The collideTime eventOut generates an event specifying the time when the user's avatar (see NavigationInfo) intersects the collidable children or proxy of the Collision node. An ideal implementation computes the exact time of intersection. Implementations may approximate the ideal by sampling the positions of collidable objects and the user. The NavigationInfo node contains additional information for parameters that control the user's size.
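One way to approximate the intersection time from sampled positions is to interpolate between the last sample with clearance and the first sample without it. The Python sketch below assumes the clearance changes linearly between samples; the clearance measure (distance to the surface minus a collision radius, such as the first avatarSize value in NavigationInfo) and the function name are illustrative assumptions, not part of the specification.

```python
from typing import Optional


def estimate_collide_time(t0: float, d0: float,
                          t1: float, d1: float) -> Optional[float]:
    """Estimate when the avatar first touched a surface between two samples.

    d0 and d1 are the clearances at times t0 < t1; a clearance <= 0 means
    the avatar intersects the geometry."""
    if d0 <= 0.0:
        return t0          # already touching at the earlier sample
    if d1 > 0.0:
        return None        # no contact by the later sample
    # Root of the straight line through (t0, d0) and (t1, d1).
    return t0 + (t1 - t0) * d0 / (d0 - d1)
```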

There is no support for object/object collision in ISO/IEC 14772-1.

A navigation type of NONE (see the NavigationInfo node) implies that the world author is controlling all navigation, in which case the world author can use a Collision node to detect and respond to collisions.

Note that the Collision node only handles collisions between the user and the world; it does not detect collisions between arbitrary objects in the world. General, object-to-object collision detection is not specified in VRML.

Collision detection and terrain following are often confused. Terrain following means keeping the viewer's feet on the ground and is a function of the VRML browser's user interface. The avatarSize field of the NavigationInfo node can be used to control the viewer's height above the terrain, and browsers may decide to treat objects that are invisible to collisions as also being invisible to terrain-following calculations.

The following example illustrates several uses of the Collision node. Note the use of the invisible proxy to restrict avatar navigation in the second room:

#VRML V2.0 utf8

Group { children [

Collision { children [ # 1st room - collidable

Shape {

appearance DEF WHITE Appearance {

material DEF M Material {

diffuseColor 1 1 1

ambientIntensity .33

}

}

geometry Extrusion {

crossSection [ 23 -17, 20 -17, 20 -30, 0 -30,

0 0, 20 0, 20 -13, 23 -13 ]

spine [ 0 0 0, 0 3 0 ]

ccw FALSE

}

}

Transform { translation 5 1 -24 # Cone in the 1st room

children Collision {

proxy DEF BBOX Shape { geometry Box{} }

children DEF CONE Shape { geometry Cone {} }

}}

Transform { translation 15 0.3 -26 # Sphere in 1st room

children Collision {

proxy USE BBOX

children DEF SPHERE Shape {

geometry Sphere {}

}}}

Transform { translation 15 0.3 -5 # Box in the 1st room

children Collision {

proxy USE BBOX

children DEF BOX Shape { geometry Box {} }

}}

]} # end of first room

Collision { # Second room - uses proxy

proxy Shape {

geometry Extrusion {

crossSection [ 23 -17, 40 -25, 40 -5, 23 -13 ]

spine [ 0 0 0, 0 3 0 ]

}

}

children [ # These children will not be collided w/

Shape { # 2nd room

appearance USE WHITE

geometry Extrusion {

crossSection [ 23 -17, 23 -30, 43 -30, 43 0,

23 0, 23 -13 ]

spine [ 0 0 0, 0 3 0 ]

}

}

Transform {

translation 25 1 -24

children USE CONE

}

Transform {

translation 40 0.3 -2

children USE SPHERE

}

Transform {

translation 40 0.3 -28

children USE BOX

}

]

}

Collision { # Translucent force field - no collision

collide FALSE

children Shape {

appearance Appearance { material Material { transparency 0.5 } }

geometry Extrusion {

crossSection [ 21.5 -17, 21.5 -13 ]

spine [ 0 0.2 0, 0 2.5 0 ]

solid FALSE

}}}

Viewpoint { position 3.0 1.6 -2 }

PointLight { location 22 20 -15 radius 20 }

]}


Color {

exposedField MFColor color [] # [0,1]

}

This node defines a set of RGB colours to be used in the fields of another node.

Color nodes are only used to specify multiple colours for a single geometric shape, such as colours for the faces or vertices of an IndexedFaceSet. A Material node is used to specify the overall material parameters of lit geometry. If both a Material and a Color node are specified for a geometric shape, the colours shall replace the diffuse component of the material.

Using the Color node to specify colors per vertex of IndexedFaceSet nodes is a very efficient and effective alternative to texture mapping. If designed properly, color per vertex can produce rich lighting and color effects. Typically, color-per-vertex rendering is much faster than texture mapping and is thus worth the effort. Note, however, that some browsers do not support color-per-vertex rendering; verify that it is supported before using this feature.

Textures take precedence over colours; specifying both a Texture and a Color node for a geometric shape will result in the Color node being ignored. Details on lighting equations are described in "2.14 Lighting model."

Color nodes are specified in the color field of ElevationGrid, IndexedFaceSet, IndexedLineSet, or PointSet nodes.

A Color node can function as a general color map for IndexedFaceSet and IndexedLineSet nodes. You simply DEF the Color node and USE it repeatedly, using the indexing feature of IndexedFaceSet or IndexedLineSet to refer to colors by index rather than by absolute RGB value. If you are translating from an application that only supports a limited (e.g., 256-color) color palette, then this technique can make the resulting VRML files much smaller than respecifying the RGB colors over and over.
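A converter from such a palette-based application might build the shared Color node as follows. This Python sketch is hypothetical (the function names and the DEF name PALETTE are assumptions): it stores each distinct colour once and returns the palette index of every vertex colour.

```python
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]


def build_palette(vertex_colors: List[RGB]) -> Tuple[List[RGB], List[int]]:
    """Return (palette, indices) with palette[indices[i]] == vertex_colors[i].
    Each distinct colour is stored once, in order of first appearance."""
    palette: List[RGB] = []
    lookup: Dict[RGB, int] = {}
    indices: List[int] = []
    for c in vertex_colors:
        if c not in lookup:
            lookup[c] = len(palette)
            palette.append(c)
        indices.append(lookup[c])
    return palette, indices


def vrml_color_node(palette: List[RGB]) -> str:
    """Text of a DEF'd Color node that other shapes can USE."""
    body = ", ".join(" ".join(f"{v:g}" for v in c) for c in palette)
    return f"DEF PALETTE Color {{ color [ {body} ] }}"
```

The returned indices still need the -1 face separators from coordIndex inserted at the same positions before they can be written out as a colorIndex for colorPerVertex TRUE.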

The following example illustrates the use of the Color node in conjunction with the IndexedFaceSet node (see Figure 3-9). The first IndexedFaceSet uses a Color node that specifies two colors: black (0,0,0) and white (1,1,1). Each vertex of each face of the IndexedFaceSet is assigned one of these two colors by the colorIndex field of the IndexedFaceSet. The second IndexedFaceSet/Color is almost identical, but does not specify a colorIndex field in the IndexedFaceSet and thus relies on the coordIndex field to assign colors (see IndexedFaceSet). The third IndexedFaceSet/Color applies color to each face of the IndexedFaceSet by setting colorPerVertex FALSE and specifying colorIndex for each face.

#VRML V2.0 utf8

Group { children [

Transform {

translation -3 0 0

children Shape {

appearance DEF A1 Appearance { material Material {} }

geometry IndexedFaceSet {

coord DEF C1 Coordinate {

point [ 1 0 1, 1 0 -1, -1 0 -1, -1 0 1, 0 3 0 ]

}

coordIndex [ 0 1 4 -1 1 2 4 -1 2 3 4 -1 3 0 4 ]

color Color { color [ 0 0 0, 1 1 1 ] }

colorIndex [ 0 0 1 -1 0 0 1 -1 0 0 1 -1 0 0 1 ]

}

}

}

Transform {

children Shape {

appearance USE A1

geometry IndexedFaceSet {

# uses coordIndex for colorIndex

coord USE C1

coordIndex [ 0 1 4 -1 1 2 4 -1 2 3 4 -1 3 0 4 ]

color Color { color [ 1 1 1, 1 1 1, 1 1 1, 1 1 1, 0 0 0 ]}

}

}

}

Transform {

translation 3 0 0

children Shape {

appearance USE A1

geometry IndexedFaceSet {

coord USE C1

coordIndex [ 0 1 4 -1 1 2 4 -1 2 3 4 -1 3 0 4 ]

color Color { color [ 0 0 0, 1 1 1 ] }

colorIndex [ 0, 1, 0, 1 ] # alt every other face

colorPerVertex FALSE

}

}

}

Background { skyColor 1 1 1 }

]}
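The three IndexedFaceSet nodes in this example rely on IndexedFaceSet's colour-indexing rules. The Python sketch below resolves the colour of each vertex of each face for colorPerVertex TRUE or FALSE, with or without a colorIndex. It is a simplified illustration (index ranges are not validated) and its function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[float, float, float]


def split_faces(index: Sequence[int]) -> List[List[int]]:
    """Split an index list on -1 separators (a trailing -1 is optional)."""
    faces: List[List[int]] = []
    current: List[int] = []
    for i in index:
        if i == -1:
            if current:
                faces.append(current)
            current = []
        else:
            current.append(i)
    if current:
        faces.append(current)
    return faces


def resolve_colors(coord_index: Sequence[int], colors: Sequence[RGB],
                   color_index: Optional[Sequence[int]] = None,
                   color_per_vertex: bool = True) -> List[List[RGB]]:
    """Colour of every vertex of every face."""
    faces = split_faces(coord_index)
    if color_per_vertex:
        # colorIndex mirrors coordIndex; if it is empty, coordIndex is used.
        index_faces = split_faces(color_index) if color_index else faces
        return [[colors[i] for i in f] for f in index_faces]
    # One colour per face: colorIndex[n] selects face n's colour;
    # if it is empty, colours are applied to the faces in order.
    return [[colors[color_index[n] if color_index else n]] * len(f)
            for n, f in enumerate(faces)]
```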


ColorInterpolator {
  eventIn      SFFloat  set_fraction          # (-∞,∞)
  exposedField MFFloat  key           []      # (-∞,∞)
  exposedField MFColor  keyValue      []      # [0,1]
  eventOut     SFColor  value_changed
}

This node interpolates among a set of MFColor key values to produce an SFColor (RGB) value_changed event. The number of colours in the keyValue field shall be equal to the number of keyframes in the key field. The keyValue field and value_changed events are defined in RGB colour space. A linear interpolation using the value of set_fraction as input is performed in HSV space (see [FOLE] for description of RGB and HSV colour spaces). Results are undefined when interpolating between two consecutive keys with complementary hues.

Section "2.6.8 Interpolators" contains a detailed discussion of interpolators.

The ColorInterpolator outputs an SFColor, suitable for use in any of the color fields of a Material node (diffuseColor, specularColor, emissiveColor). Unfortunately, a ColorInterpolator cannot be used to interpolate multiple colors (it does not generate an MFColor output) and so cannot be used with a Color node. If you do need to change the colors in a Color node, you will have to write a Script that does the appropriate calculations.

Defining the keys in RGB space but doing the interpolation in HSV space may seem somewhat strange. If the key values are very close together, then the differences between the two spaces are minimal. However, if there are large differences between the keys, then doing the interpolation in HSV space gives better perceptual results, since interpolating between two keys with the same intensity will not result in any intensity changes. That isn't true of RGB space: Interpolate from full-intensity red (1,0,0) to full-intensity green (0,1,0) and halfway you'll get half-intensity yellow (0.5,0.5,0).
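The red-to-green comparison can be checked with Python's standard colorsys module. The sketch is illustrative, not normative: in particular, taking the shorter way around the hue circle is an assumption of this sketch (complementary hues have no shorter way, which is consistent with the specification leaving that case undefined). Greys have no meaningful hue, so colorsys reports hue 0 for them.

```python
import colorsys
from typing import Tuple

RGB = Tuple[float, float, float]


def lerp_rgb(c0: RGB, c1: RGB, f: float) -> RGB:
    """Plain component-wise interpolation in RGB space, for comparison."""
    return tuple(a + f * (b - a) for a, b in zip(c0, c1))


def lerp_hsv(c0: RGB, c1: RGB, f: float) -> RGB:
    """Interpolate two RGB keys in HSV space and return an RGB colour."""
    h0, s0, v0 = colorsys.rgb_to_hsv(*c0)
    h1, s1, v1 = colorsys.rgb_to_hsv(*c1)
    dh = h1 - h0
    # Hue is circular (0 and 1 are the same red): take the shorter arc.
    if dh > 0.5:
        dh -= 1.0
    elif dh < -0.5:
        dh += 1.0
    h = (h0 + f * dh) % 1.0
    return colorsys.hsv_to_rgb(h, s0 + f * (s1 - s0), v0 + f * (v1 - v0))
```

Halfway from red to green, lerp_rgb gives the half-intensity (0.5, 0.5, 0), while lerp_hsv keeps full value and gives yellow (1, 1, 0).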

The following example illustrates the use of the ColorInterpolator node. An infinitely looping TimeSensor is routed to a ColorInterpolator that is routed to the diffuseColor of a Material that is coloring the Box, Sphere, and Cone:

#VRML V2.0 utf8

Transform { children [

Transform {

translation -4 0 0

children Shape {

geometry Box {}

appearance DEF A Appearance {

material DEF M Material { diffuseColor .8 .2 .2 }

}

}

}

Transform {

translation 0 0 0

children Shape { geometry Sphere {} appearance USE A }

}

Transform {

translation 4 0 0

children Shape { geometry Cone {} appearance USE A }

}

NavigationInfo { type "EXAMINE" }

]}

DEF CI ColorInterpolator {

key [ 0 .2 .4 .6 .8 1 ]

keyValue [ .8 .2 .2, .2 .8 .2, .2 .2 .8, .8 .8 .8,

1 0 1, .8 .2 .2 ]

}

DEF TS TimeSensor { loop TRUE cycleInterval 5 }

ROUTE TS.fraction_changed TO CI.set_fraction

ROUTE CI.value_changed TO M.set_diffuseColor


Cone {
  field  SFFloat  bottomRadius  1     # (0,∞)
  field  SFFloat  height        2     # (0,∞)
  field  SFBool   side          TRUE
  field  SFBool   bottom        TRUE
}

The Cone node specifies a cone which is centred in the local coordinate system and whose central axis is aligned with the local Y-axis. The bottomRadius field specifies the radius of the cone's base, and the height field specifies the height of the cone from the centre of the base to the apex. By default, the cone has a radius of 1.0 at the bottom and a height of 2.0, with its apex at y = height/2 and its bottom at y = -height/2. Both bottomRadius and height must be greater than 0.0. Figure 3-10 illustrates the Cone node.

The side field specifies whether sides of the cone are created and the bottom field specifies whether the bottom cap of the cone is created. A value of TRUE specifies that this part of the cone exists, while a value of FALSE specifies that this part does not exist (not rendered or eligible for collision or sensor intersection tests).

When a texture is applied to the sides of the cone, the texture wraps counterclockwise (from above) starting at the back of the cone. The texture has a vertical seam at the back in the X=0 plane, from the apex (0, height/2, 0) to the point (0, -height/2, -bottomRadius). For the bottom cap, a circle is cut out of the texture square centred at (0, -height/2, 0) with dimensions (2 × bottomRadius) by (2 × bottomRadius). The bottom cap texture appears right side up when the top of the cone is rotated towards the -Z-axis. TextureTransform affects the texture coordinates of the Cone.
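These placement rules translate into a small mapping from surface points to (s, t) texture coordinates. This Python sketch is derived from the prose above (seam at the back on -Z, counterclockwise wrap seen from above, bottom cap upright when the top is tipped toward -Z), assumes no TextureTransform, and uses hypothetical function names.

```python
import math
from typing import Tuple


def cone_side_tex_coord(x: float, y: float, z: float,
                        height: float) -> Tuple[float, float]:
    """(s, t) for a point on the cone's side.

    s is 0 at the back seam (-Z) and increases counterclockwise seen from
    above (back, then -X, front, +X); t runs from 0 at the base
    (y = -height/2) to 1 at the apex (y = +height/2)."""
    s = (math.atan2(-x, -z) / (2.0 * math.pi)) % 1.0
    t = (y + height / 2.0) / height
    return s, t


def cone_bottom_tex_coord(x: float, z: float,
                          bottom_radius: float) -> Tuple[float, float]:
    """(s, t) for a point on the bottom cap: the cap is the circle inscribed
    in the texture square, with +X to the right and +Z toward the top of
    the image."""
    return (x / bottom_radius + 1.0) / 2.0, (z / bottom_radius + 1.0) / 2.0
```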

The Cone geometry requires outside faces only. When viewed from the inside the results are undefined.

Cone nodes are specified in the geometry field of a Shape node; they may not be children of a Transform or Group node.

The VRML 1.0 version of the Cone was almost exactly the same. The only difference is how the cone parts are specified. VRML 1.0 had a special SFBitMask field type for specifying a set of bits. One of the simplifications made in VRML 2.0 was removing that field type, since the same results can be achieved using multiple SFBool fields. So the VRML 1.0 Cone's SFBitMask parts field becomes the side and bottom SFBool fields.

Like the rest of the geometry primitives (Box, Sphere, and Cylinder), none of the fields of Cone are exposed, allowing very lightweight, efficient implementations. If you need to change the size of a cone, you must modify a parent Transform node's scale field. If you want to turn the parts of a Cone on and off, you must either simulate that by using a Switch node containing several Cone Shapes, or you must remove the Cone from its Shape (effectively deleting it) and replace it with a newly created Cone.

The following example illustrates the use of the Cone node (see Figure 3-11). The first cone sits on top of the second cone. Note the default texture map orientation as seen in the second Cone:

#VRML V2.0 utf8

Transform { children [

Transform {

translation 0 2.0 0 # sit on top of other Cone

children Transform {

translation 0 -1 0

children Shape {

geometry Cone { bottomRadius 2 height 1 }

appearance Appearance {

material Material { diffuseColor 1 1 1 }

}

}

}

}

Transform {

translation 0 1 0 # sit on y=0

children Transform {

translation 0 -1 0

children Shape {

geometry Cone { bottomRadius 2 height 4 bottom FALSE }

appearance Appearance {

material Material { diffuseColor 1.0 1.0 1.0 }

texture ImageTexture { url "marble2.gif" }

}

}

}

}

DirectionalLight { direction -.5 -0.5 .6 }

Background { skyColor 1 1 1 }

NavigationInfo { type "EXAMINE" }

]}


Coordinate {
  exposedField MFVec3f  point  []     # (-∞,∞)
}

This node defines a set of 3D coordinates to be used in the coord field of vertex-based geometry nodes including IndexedFaceSet, IndexedLineSet, and PointSet.

The VRML 1.0 term for the Coordinate node is Coordinate3. The "3" was originally added in case support for 2D coordinates was added. It was dropped because the VRML 2.0 naming philosophy is to give each node the most obvious name and not try to predict how the specification will change in the future. If carried out to its logical extreme, then a philosophy of planning for future extensions might give Coordinate the name CartesianCoordinate3Float, since support for polar or spherical coordinates might possibly be added in the future, as might double-precision or integer coordinates.

See IndexedFaceSet, IndexedLineSet, and PointSet for examples of the Coordinate node.


CoordinateInterpolator {
  eventIn      SFFloat  set_fraction          # (-∞,∞)
  exposedField MFFloat  key           []      # (-∞,∞)
  exposedField MFVec3f  keyValue      []      # (-∞,∞)
  eventOut     MFVec3f  value_changed
}

This node linearly interpolates among a set of MFVec3f values. The number of coordinates in the keyValue field shall be an integer multiple of the number of keyframes in the key field. That integer multiple defines how many coordinates will be contained in the value_changed events.
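The keyframe lookup and per-coordinate interpolation can be sketched in Python as follows. This is an illustration under stated assumptions (keys sorted in nondecreasing order, output held at the first or last keyframe when set_fraction is outside the key range), not a reference implementation; the function name is hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def coordinate_interpolate(key: Sequence[float], key_value: Sequence[Vec3],
                           fraction: float) -> List[Vec3]:
    """value_changed for a given set_fraction.

    key_value holds len(key) consecutive groups of n coordinates each."""
    if not key or len(key_value) % len(key) != 0:
        raise ValueError("keyValue length must be a multiple of key length")
    n = len(key_value) // len(key)          # coordinates per event
    frames = [key_value[i * n:(i + 1) * n] for i in range(len(key))]
    if fraction <= key[0]:
        return list(frames[0])
    if fraction >= key[-1]:
        return list(frames[-1])
    i = bisect_right(key, fraction) - 1     # key[i] <= fraction < key[i+1]
    f = (fraction - key[i]) / (key[i + 1] - key[i])
    return [tuple(a + f * (b - a) for a, b in zip(p, q))
            for p, q in zip(frames[i], frames[i + 1])]
```

With two keys and eight coordinates, as in the example at the end of this section, each value_changed event carries four coordinates.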

Section "2.6.8 Interpolators" contains a more detailed discussion of interpolators.

Remember that TimeSensor outputs fraction_changed events in the 0.0 to 1.0 range, and that interpolator nodes routed from TimeSensors should restrict their key field values to the 0.0 to 1.0 range to match the TimeSensor output and thus produce a full interpolation sequence.

The CoordinateInterpolator was near the edge of the "cut line" for what features should be included in VRML 2.0 and what features should be left out. The following pros and cons influenced the decision and should give you an idea of how decisions were made on which features should be part of the specification.

Con: There is a strong desire to keep the VRML specification as small as possible. A big, bloated specification is hard to implement and hard to write conformance tests for, takes a very long time to create, and encourages incompatible, partial implementations.

Pro: Coordinate morphing is a feature that many people requested. VRML 2.0 was designed "in the open." Drafts of the specification were constantly made available on the WWW; polls were taken on general, high-level design issues; and there were constant discussions and debates on the www-vrml mailing list. This provided invaluable information that helped prioritize decisions about what should be included and excluded, and provided time for unpopular decisions to be either justified or reversed.

Con: CoordinateInterpolator functionality can be accomplished with a Script node. Features that are not "fundamental" (that can be implemented using other features of the specification) were likely to be cut.

Pro: CoordinateInterpolator calculations can require a lot of computing power. Interpolating hundreds or thousands of coordinates is computationally expensive compared to interpolating a single translation or rotation. Making CoordinateInterpolator a standard node encourages highly optimized implementations, which will be much faster than a Script node equivalent.

Con: Implementing shapes with coordinates that may change over time can be difficult. Many interactive rendering libraries are optimized for the display of scenes made up of rigid-body objects, assuming that not many objects will change shape. Changing coordinates also requires that normals be regenerated (if explicit normals are not specified), which is also a fairly expensive operation. Adding CoordinateInterpolator to the specification encourages world creators to use a feature that might result in poor performance on many machines.

In the end, the positives outweighed the negatives, but it was not an easy decision and several other possible interpolators did not make the cut (there is no TextureCoordinateInterpolator because there isn't a strong enough demand for it, for example).

The following example illustrates a typical use of the CoordinateInterpolator node (see Figure 3-12). A TouchSensor is routed to a TimeSensor that fires the CoordinateInterpolator:

#VRML V2.0 utf8
Group {
  children [
    DEF CI CoordinateInterpolator {
      key [ 0.0, 1.0 ]
      keyValue [ 1 0 -1, -1 0 -1, 0 0 1, 0 0.5 0,   # point set for key 0.0
                 1 0 -1, -1 0 -1, 0 0 1, 0 3.0 0 ]  # point set for key 1.0
    }
    Shape {
      geometry IndexedFaceSet {
        coord DEF C Coordinate {
          point [ 1 0 -1, -1 0 -1, 0 0 1, 0 0.5 0 ]
        }
        coordIndex [ 0 1 3 -1 1 2 3 -1 2 0 3 ]
      }
      appearance Appearance { material Material {} }
    }
    DEF T TouchSensor {}     # Click to start the morph
    DEF TS TimeSensor {      # Drives the interpolator
      cycleInterval 3.0      # 3 second morph
      loop TRUE
    }
    Background { skyColor 1 1 1 }
  ]
}
ROUTE CI.value_changed TO C.point
ROUTE T.touchTime TO TS.startTime
ROUTE TS.fraction_changed TO CI.set_fraction
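To make the interpolation concrete, here is a minimal Python sketch of what a browser computes for each set_fraction event, assuming the piecewise-linear interpolation and end clamping that VRML interpolators use. keyValue is read as one point set per key (so its length must be a multiple of the number of keys), and the two sets that bracket the fraction are blended point by point. The function name coordinate_interpolate is hypothetical; the data are the two point sets from the example's keyValue.

```python
from bisect import bisect_right

Vec3 = tuple[float, float, float]


def coordinate_interpolate(key: list[float], key_value: list[Vec3], fraction: float) -> list[Vec3]:
    """Sketch of the value_changed list a CoordinateInterpolator sends for one fraction."""
    n_keys = len(key)
    if n_keys == 0 or len(key_value) % n_keys != 0:
        raise ValueError("keyValue must hold one complete point set per key")
    per_set = len(key_value) // n_keys
    sets = [key_value[i * per_set:(i + 1) * per_set] for i in range(n_keys)]

    # Fractions outside the key range clamp to the first or last point set.
    if fraction <= key[0]:
        return list(sets[0])
    if fraction >= key[-1]:
        return list(sets[-1])

    # Locate the interval key[i] <= fraction < key[i + 1] and blend linearly.
    i = bisect_right(key, fraction) - 1
    t = (fraction - key[i]) / (key[i + 1] - key[i])
    return [
        tuple(a + t * (b - a) for a, b in zip(p0, p1))
        for p0, p1 in zip(sets[i], sets[i + 1])
    ]


key = [0.0, 1.0]
key_value = [(1, 0, -1), (-1, 0, -1), (0, 0, 1), (0, 0.5, 0),
             (1, 0, -1), (-1, 0, -1), (0, 0, 1), (0, 3.0, 0)]
print(coordinate_interpolate(key, key_value, 0.5)[3])  # (0.0, 1.75, 0.0)
```

At fraction 0.5 only the fourth point (the apex) moves, halfway from y = 0.5 to y = 3.0; the three base points are identical in both sets and stay put. Every output point costs one blend per coordinate, which is why interpolating thousands of points is much heavier than interpolating a single translation.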


Cylinder {
  field    SFBool    bottom  TRUE
  field    SFFloat   height  2         # (0,∞)
  field    SFFloat   radius  1         # (0,∞)
  field    SFBool    side    TRUE
  field    SFBool    top     TRUE
}

The Cylinder node specifies a capped cylinder centred at (0,0,0) in the local coordinate system and with a central axis oriented along the local Y-axis. By default, the cylinder extends from -1 to +1 in all three dimensions. The radius field specifies the radius of the cylinder and the height field specifies the height of the cylinder along the central axis. Both radius and height shall be greater than 0.0. Figure 3-13 illustrates the Cylinder node.

The cylinder has three parts: the side, the top (Y = +height/2) and the bottom (Y = -height/2). Each part has an associated SFBool field that indicates whether the part exists (TRUE) or does not exist (FALSE). Parts which do not exist are not rendered and not eligible for intersection tests (e.g., collision detection or sensor activation).
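The rule that missing parts are neither rendered nor eligible for intersection tests can be illustrated with a ray-casting sketch. It assumes the ray is already expressed in the cylinder's local coordinate system (centred at the origin, axis along Y) and returns the nearest hit in front of the ray origin, ignoring any part whose flag is FALSE. The function intersect_cylinder and its keyword arguments mirror the node's fields but are hypothetical, not a browser API.

```python
import math
from typing import Optional


def intersect_cylinder(origin, direction, radius=1.0, height=2.0,
                       side=True, top=True, bottom=True) -> Optional[tuple[float, str]]:
    """Return (t, part) for the nearest hit with t > 0, or None if nothing is hit.

    origin and direction are (x, y, z) tuples in the cylinder's local space.
    Parts whose flag is False are skipped, so rays pass straight through them.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    half = height / 2.0
    hits = []

    if side:
        # Solve (ox + t*dx)^2 + (oz + t*dz)^2 = radius^2, then keep hits within the height.
        a = dx * dx + dz * dz
        b = 2.0 * (ox * dx + oz * dz)
        c = ox * ox + oz * oz - radius * radius
        disc = b * b - 4.0 * a * c
        if a > 0.0 and disc >= 0.0:
            root = math.sqrt(disc)
            for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
                if t > 0.0 and abs(oy + t * dy) <= half:
                    hits.append((t, "side"))

    # Each cap is a disk of the given radius lying in the plane Y = +height/2 or -height/2.
    for enabled, cap_y, name in ((top, half, "top"), (bottom, -half, "bottom")):
        if enabled and dy != 0.0:
            t = (cap_y - oy) / dy
            x, z = ox + t * dx, oz + t * dz
            if t > 0.0 and x * x + z * z <= radius * radius:
                hits.append((t, name))

    return min(hits) if hits else None


print(intersect_cylinder((0, 5, 0), (0, -1, 0)))             # (4.0, 'top')
print(intersect_cylinder((0, 5, 0), (0, -1, 0), top=False))  # (6.0, 'bottom')
```

With top disabled, the downward ray passes through the missing cap and reaches the bottom cap from the inside.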

When a texture is applied to a cylinder, it is applied differently to the sides, top, and bottom. On the sides, the texture wraps counterclockwise (from above) starting at the back of the cylinder. The texture has a vertical seam at the back, intersecting the X=0 plane. For the top and bottom caps, a circle is cut out of the unit texture squares centred at (0, +/- height/2, 0) with dimensions 2 × radius by 2 × radius. The top texture appears right side up when the top of the cylinder is tilted toward the +Z-axis, and the bottom texture appears right side up when the top of the cylinder is tilted toward the -Z-axis. TextureTransform affects the texture coordinates of the Cylinder node.
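The texture rules above can be turned into explicit (s, t) formulas. The following Python sketch is one reading of the prose, not formulas quoted from the specification: on the side, s increases counterclockwise as seen from +Y, from 0 at the back (-Z) through 0.25 at -X, 0.5 at the front (+Z) and 0.75 at +X, while t runs from 0 at the bottom to 1 at the top; on each cap, s follows +X and t is oriented so the cap reads right side up in the tilted view the text describes. The name cylinder_texcoord is hypothetical, and any TextureTransform is ignored.

```python
import math


def cylinder_texcoord(point, part, radius=1.0, height=2.0):
    """Return (s, t) for a point on the 'side', 'top' or 'bottom' of a Cylinder."""
    x, y, z = point
    if part == "side":
        # Angle counterclockwise as seen from above (+Y), zero at the back (-Z).
        angle = math.atan2(-x, -z) % (2.0 * math.pi)
        s = angle / (2.0 * math.pi)
        t = (y + height / 2.0) / height
        return s, t
    # Caps: a circle of diameter 2 * radius cut from the unit texture square.
    s = 0.5 + x / (2.0 * radius)
    if part == "top":
        t = 0.5 - z / (2.0 * radius)   # top tilted toward +Z: the -Z edge is at the top of the image
    elif part == "bottom":
        t = 0.5 + z / (2.0 * radius)   # top tilted toward -Z: the +Z edge is at the top of the image
    else:
        raise ValueError(f"unknown part: {part!r}")
    return s, t
```

Because s is taken modulo one full turn, the seam at the back (X = 0, Z < 0) is where s jumps from just below 1 back to 0.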

The Cylinder node's geometry requires outside faces only. When it is viewed from the inside, the results are undefined.

Cylinder nodes are specified in the geometry field of a Shape node; they may not be children of a Transform or Group node.

VRML 1.0 allowed the application of separate materials to each of the parts of the cylinder. That feature was removed because it was rarely used and because removing it simplified both the Cylinder node and the Material node (which was constrained to containing only one material definition). To accomplish the equivalent functionality with VRML 2.0, you must define three separate cylinder shapes, each with a different part and a different material. This is a more general mechanism, allowing each part to have a different texture or material.
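The three-shape workaround is mechanical enough to script. The hypothetical Python helper below emits a VRML 2.0 fragment (no file header) with one Shape per part: each Shape enables a single part of an identically sized Cylinder and gives it its own Material. It is a sketch only; a texture could be added to any of the three Appearance nodes in the same way.

```python
def per_part_cylinder(radius, height, colors):
    """Emit VRML 2.0 text for a cylinder whose side, top and bottom have different materials.

    colors maps each part name ('side', 'top', 'bottom') to an RGB triple.
    """
    parts = ("side", "top", "bottom")
    shapes = []
    for part in parts:
        # Enable only this part; the other two parts are set to FALSE.
        flags = " ".join(f"{p} {'TRUE' if p == part else 'FALSE'}" for p in parts)
        r, g, b = colors[part]
        shapes.append(
            "Shape {\n"
            f"  appearance Appearance {{ material Material {{ diffuseColor {r} {g} {b} }} }}\n"
            f"  geometry Cylinder {{ radius {radius} height {height} {flags} }}\n"
            "}"
        )
    return "Group { children [\n" + "\n".join(shapes) + "\n] }"


print(per_part_cylinder(1, 2, {"side": (1, 0, 0), "top": (0, 1, 0), "bottom": (0, 0, 1)}))
```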

The following example illustrates use of the Cylinder node (see Figure 3-14). Note the default orientation of the texture map on the cylinder sides and caps:

#VRML V2.0 utf8
Group { children [
  DEF C1 Shape {
    appearance Appearance {
      material DEF M1 Material {
        diffuseColor 1 1 1
        specularColor 1 1 1
        shininess .9
      }
      texture ImageTexture { url "marble2.gif" }
    }
    geometry Cylinder { radius 1 height 5.0 }
  }
  Transform {
    translation 0 1 0
    rotation 0 0 1 1.571
    children Shape {
      appearance DEF A1 Appearance { material USE M1 }
      geometry Cylinder { radius 0.5 height 4.0 }
    }
  }
  Transform {
    translation 0 -2.5 0
    children DEF C2 Shape {
      appearance USE A1
      geometry Cylinder { radius 1.5 height 0.5 }
    }
  }
  Transform {
    translation 0 1 0
    rotation 0 0 1 1.571
    scale 0.25 1.5 1
    children USE C1
  }
  Transform {
    translation 0 2.5 0
    scale 0.75 0.5 0.75
    children USE C2
  }
  Background { skyColor 1 1 1 }
  NavigationInfo { type "EXAMINE" }
]}


CylinderSensor {
  exposedField SFBool     autoOffset TRUE
  exposedField SFFloat    diskAngle  0.262    # (0,π/2)
  exposedField SFBool     enabled    TRUE
  exposedField SFFloat    maxAngle   -1       # [-2π,2π]
  exposedField SFFloat    minAngle   0        # [-2π,2π]
  exposedField SFFloat    offset     0        # (-∞,∞)
  eventOut     SFBool     isActive
  eventOut     SFRotation rotation_changed
  eventOut     SFVec3f    trackPoint_changed
}

The CylinderSensor node maps pointer motion (e.g., a mouse or wand) into a rotation on an invisible cylinder that is aligned with the Y-axis of the local coordinate system. The CylinderSensor uses the descendent geometry of its parent node to determine whether it is liable to generate events.

The enabled exposed field enables and disables the CylinderSensor node. If TRUE, the sensor reacts appropriately to user events. If FALSE, the sensor does not track user input or send events. If enabled receives a FALSE event and isActive is TRUE, the sensor becomes disabled and deactivated, and outputs an isActive FALSE event. If enabled receives a TRUE event the sensor is enabled and ready for user activation.

A CylinderSensor node generates events when the pointing device is activated while the pointer is indicating any descendent geometry nodes of the sensor's parent group. See "2.6.7.5 Activating and manipulating sensors" for more details on using the pointing device to activate the CylinderSensor.

Upon activation of the pointing device while indicating the sensor's geometry, an isActive TRUE event is sent. The initial acute angle between the bearing vector and the local Y-axis of the CylinderSensor node determines whether the sides of the invisible cylinder or the caps (disks) are used for manipulation. If the initial angle is less than the diskAngle, the geometry is treated as an infinitely large disk lying in the local Y=0 plane and coincident with the initial intersection point. Dragging motion is mapped into a rotation around the local +Y-axis vector of the sensor's coordinate system. The perpendicular vector from the initial intersection point to the Y-axis defines zero rotation about the Y-axis. For each subsequent position of the bearing, a rotation_changed event is sent that equals the sum of the rotation about the +Y-axis vector (from the initial intersection to the new intersection) plus the offset value. trackPoint_changed events reflect the unclamped drag position on the surface of this disk. When the pointing device is deactivated and autoOffset is TRUE, offset is set to the last value of rotation_changed and an offset_changed event is generated. Section "2.6.7.4 Drag sensors" provides a more general description of autoOffset and offset_changed.
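The disk-or-cylinder decision depends only on the acute angle between the bearing and the local Y-axis. A minimal sketch, assuming the bearing direction has already been transformed into the sensor's local coordinates (choose_mode is a hypothetical name); the default diskAngle of 0.262 radians is about 15 degrees:

```python
import math


def choose_mode(bearing, disk_angle=0.262):
    """Return 'disk' or 'cylinder' for a bearing direction given in local coordinates."""
    bx, by, bz = bearing
    length = math.sqrt(bx * bx + by * by + bz * bz)
    if length == 0.0:
        raise ValueError("bearing direction must be non-zero")
    # abs() folds the angle into [0, pi/2], giving the acute angle to the Y-axis.
    acute = math.acos(min(1.0, abs(by) / length))
    return "disk" if acute < disk_angle else "cylinder"
```

Looking almost straight down (or up) the Y-axis therefore selects disk behaviour, while a more oblique view selects the cylinder.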

If the initial acute angle between the bearing vector and the local Y-axis of the CylinderSensor node is greater than or equal to diskAngle, then the sensor behaves like a cylinder. The shortest distance between the point of intersection (between the bearing and the sensor's geometry) and the Y-axis of the parent group's local coordinate system determines the radius of an invisible cylinder used to map pointing device motion and marks the zero rotation value. For each subsequent position of the bearing, a rotation_changed event is sent that equals the sum of the right-handed rotation from the original intersection about the +Y-axis vector plus the offset value. trackPoint_changed events reflect the unclamped drag position on the surface of the invisible cylinder. When the pointing device is deactivated and autoOffset is TRUE, offset is set to the last rotation angle and an offset_changed event is generated. More details are available in "2.6.7.4 Drag sensors."
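In both modes the reported angle is a right-handed rotation about +Y from the initial intersection to the current one, plus offset. The Python sketch below assumes both intersection points are given in the sensor's local coordinates at activation time; it projects them onto the Y=0 plane, takes the signed angle with atan2, and applies the autoOffset rule on release. The names drag_angle and CylinderDrag are hypothetical, and because atan2 returns values in [-π, π], this sketch does not accumulate rotations beyond half a turn in either direction.

```python
import math


def drag_angle(initial_hit, current_hit):
    """Signed right-handed angle about +Y from initial_hit to current_hit."""
    ax, _, az = initial_hit   # projecting onto Y = 0 simply drops the Y component
    bx, _, bz = current_hit
    cross_y = az * bx - ax * bz   # Y component of the cross product a x b
    dot = ax * bx + az * bz
    return math.atan2(cross_y, dot)


class CylinderDrag:
    """Minimal drag state: offset handling and the autoOffset update on release."""

    def __init__(self, offset=0.0, auto_offset=True):
        self.offset = offset
        self.auto_offset = auto_offset
        self.last_angle = offset
        self._start = None

    def press(self, hit):
        self._start = hit

    def move(self, hit):
        if self._start is None:
            raise RuntimeError("move() called before press()")
        self.last_angle = drag_angle(self._start, hit) + self.offset
        # rotation_changed value as (axis x, axis y, axis z, angle).
        return (0.0, 1.0, 0.0, self.last_angle)

    def release(self):
        if self.auto_offset:
            self.offset = self.last_angle   # a browser would also send offset_changed
        self._start = None
```

A positive angle turns +Z toward +X, matching the right-hand rule about +Y. With autoOffset TRUE, a second drag starts from where the first one ended instead of snapping back to zero.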

When the sensor generates an isActive TRUE event, it grabs all further motion events from the pointing device until it is released and generates an isActive FALSE event (other pointing-device sensors cannot generate events during this time). Motion of the pointing device while isActive is TRUE is referred to as a "drag." If a 2D pointing device is in use, isActive events will typically reflect the state of the primary button associated with the device (i.e., isActive is TRUE when the primary button is pressed and FALSE when it is released). If a 3D pointing device (e.g., a wand) is in use, isActive events will typically reflect whether the pointer is within or in contact with the sensor's geometry.
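Taken together, the enabled rule and the activation rules above behave like a small state machine. The sketch below assumes a 2D pointing device whose primary button drives activation; SensorActivation and its methods are hypothetical, and the events list merely stands in for the isActive eventOut.

```python
class SensorActivation:
    """Tracks enabled/isActive for one drag sensor and records the isActive events it sends."""

    def __init__(self, enabled=True):
        self.enabled = enabled
        self.is_active = False
        self.events = []   # (eventOut name, value) pairs in the order they were sent

    def button_down(self, over_sensor_geometry):
        # Activation needs an enabled sensor and a pointer over the sensor's geometry.
        if self.enabled and over_sensor_geometry and not self.is_active:
            self.is_active = True
            self.events.append(("isActive", True))

    def drag(self):
        # True if this sensor grabs the motion event (no other pointing-device sensor sees it).
        return self.is_active

    def button_up(self):
        if self.is_active:
            self.is_active = False
            self.events.append(("isActive", False))

    def set_enabled(self, value):
        self.enabled = value
        # Disabling an active sensor deactivates it and sends isActive FALSE.
        if not value and self.is_active:
            self.is_active = False
            self.events.append(("isActive", False))
```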

While the pointing device is activated, trackPoint_changed and rotation_changed events are output and are interpreted from pointing device motion based on the sensor's local coordinate system at the time of activation. trackPoint_changed events represent the unclamped intersection points on the surface of the invisible cylinder or disk. If the initial angle results in cylinder rotation (as opposed to disk behaviour) and if the pointing device is dragged off the cylinder while activated, browsers may interpret this in a variety of ways (e.g., clamping all values to the cylinder and continuing to rotate as the point is dragged away from the cylinder). Each movement of the pointing device while isActive is TRUE generates trackPoint_changed and rotation_changed events.

The minAngle and maxAngle fields clamp rotation_changed events to a range of values. If minAngle is greater than maxAngle, rotation_changed events are not clamped. The minAngle and maxAngle fields are restricted to the range [-2π, 2π].
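A sketch of the clamping rule in Python follows (clamp_rotation is a hypothetical name). Note that the default values, minAngle 0 and maxAngle -1, already satisfy minAngle > maxAngle, so a CylinderSensor with default fields does not clamp at all.

```python
import math


def clamp_rotation(angle, min_angle=0.0, max_angle=-1.0):
    """Apply the minAngle/maxAngle rule to the angle of a rotation_changed event."""
    for name, value in (("minAngle", min_angle), ("maxAngle", max_angle)):
        if not -2.0 * math.pi <= value <= 2.0 * math.pi:
            raise ValueError(f"{name} must lie in [-2*pi, 2*pi]")
    if min_angle > max_angle:
        return angle   # clamping disabled, as with the defaults
    return max(min_angle, min(angle, max_angle))


print(clamp_rotation(5.0))                     # 5.0
print(clamp_rotation(5.0, 0.0, math.pi / 2))   # 1.5707963267948966
print(clamp_rotation(-1.0, 0.0, math.pi / 2))  # 0.0
```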

Further information about this behaviour may be found in "2.6.7.3 Pointing-device sensors", "2.6.7.4 Drag sensors", and "2.6.7.5 Activating and manipulating sensors."

It is usually a bad idea to route a drag sensor to its own parent. Typically, the drag sensor will route to a Transform, which does not affect the sensor. See the following examples.

SphereSensor and CylinderSensor map the 2D motions of a mouse (or other pointing device) into 3D rotations. CylinderSensor constrains the rotation to a single axis, while SphereSensor allows arbitrary rotation.

A CylinderSensor is not useful by itself; you must also specify some geometry to act as the "knob" and must do something with the rotation_changed events. Usually, the geometry will be put into a Transform node and the rotation_changed events will be sent to the Transform's set_rotation eventIn, so that the geometry rotates as the user manipulates the CylinderSensor. For example:

#VRML V2.0 utf8

Group { children [

DEF CS CylinderSensor { }